AI is a powerful force driving the design of computer architectures. Its impact includes both an explosion of new startups and hardware designs and rapid evolutionary change across all platforms. Although these investments are impressive, most of the activity focuses on consumer or enterprise areas such as autonomous driving, social networks, finance, and virtual reality, all of which generate models from massive numbers of small, labeled data items (e.g., pictures). DOE mission areas are aimed at computational science with HPC and experimental data, where datasets can be drastically different: hundreds of simulations or experiments with dozens of dimensions, rather than millions of photos. Argonne is developing a focused strategy to shape AI hardware to serve its science mission, leveraging community and industry investments in technology and in scalable, intermediate, and low-power edge systems for AI.

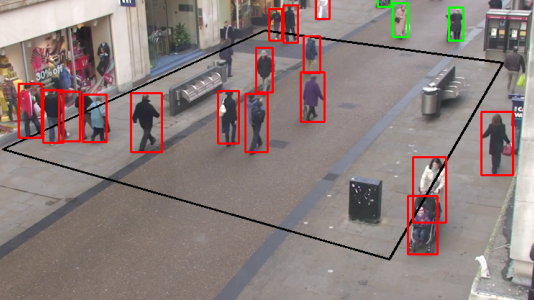

Argonne operates many distributed facilities, such as the ARM Climate Research Facility, comprising sensors and instruments across the planet. Such instruments produce vast quantities of data that often cannot be efficiently moved to or stored in a central repository. Moving a portion of the data analysis pipeline “to the edge,” where the data are generated, lets the computation identify the highest-value data to save and autonomously respond to and control the experiment. Advances in AI and machine learning are among the enablers of edge computing, facilitating decision making and the interpretation of data from multiple sensors. Edge computing is possible even with relatively low-powered hardware because the expensive step, processing a large body of training data into machine learning models, is done on high-performance servers, and the resulting models can then be deployed at the edge.
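
The division of labor described above, with training done on servers and inference done at the edge, can be illustrated with a minimal sketch. Everything in it is hypothetical: the `WEIGHTS` and `BIAS` values stand in for a model trained elsewhere, and `Reading`, `score`, and `triage` are names invented for this example rather than part of any Argonne or ARM software.

```python
import math
from dataclasses import dataclass

# Hypothetical parameters standing in for a model that was trained
# on high-performance servers and then shipped to the edge device.
WEIGHTS = [1.5, -2.0, 0.5]
BIAS = -0.25


@dataclass
class Reading:
    """One feature vector produced by a sensor (illustrative type)."""
    sensor_id: str
    features: list[float]


def score(reading: Reading) -> float:
    """Estimate the value of a reading with a logistic model (cheap inference)."""
    z = BIAS + sum(w * x for w, x in zip(WEIGHTS, reading.features))
    return 1.0 / (1.0 + math.exp(-z))


def triage(readings: list[Reading], threshold: float = 0.8) -> list[Reading]:
    """Keep only readings whose score meets the threshold; the rest are dropped."""
    return [r for r in readings if score(r) >= threshold]


if __name__ == "__main__":
    batch = [
        Reading("a", [2.0, 0.0, 1.0]),
        Reading("b", [0.0, 1.0, 0.0]),
        Reading("c", [1.0, 0.0, 0.0]),
    ]
    for kept in triage(batch):
        print(kept.sensor_id, round(score(kept), 3))
```

Scoring a reading costs only a handful of multiplications, which is why this step can run on low-power hardware; the threshold is an assumed tuning knob that trades the volume of data retained against the risk of discarding something useful.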