Argonne’s Robotics and Augmented-Reality Laboratory develops innovative robotics technologies in support of programs that span diverse application areas, including manufacturing, materials development, the energy and nuclear industries, and field and service robotics. These innovations build on core technologies in:

- Robotics, telerobotics, and human-robot interaction;

- Real-time sensing and reconstruction;

- Virtual reality (VR) simulation;

- Multimodal augmented reality (AR); and

- Cyber-physical systems based on the Robot Operating System (ROS).

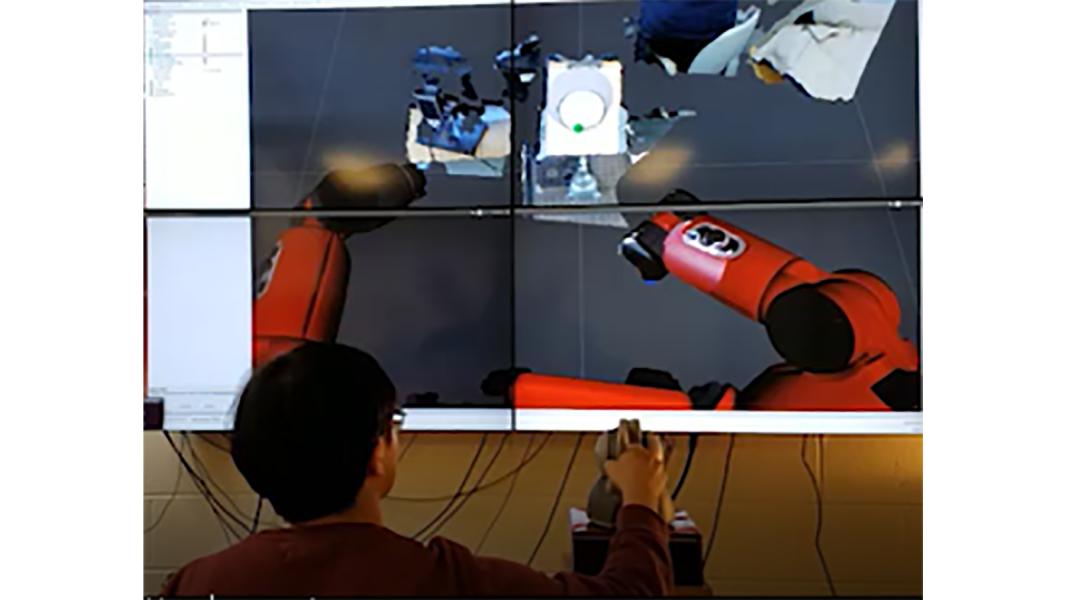

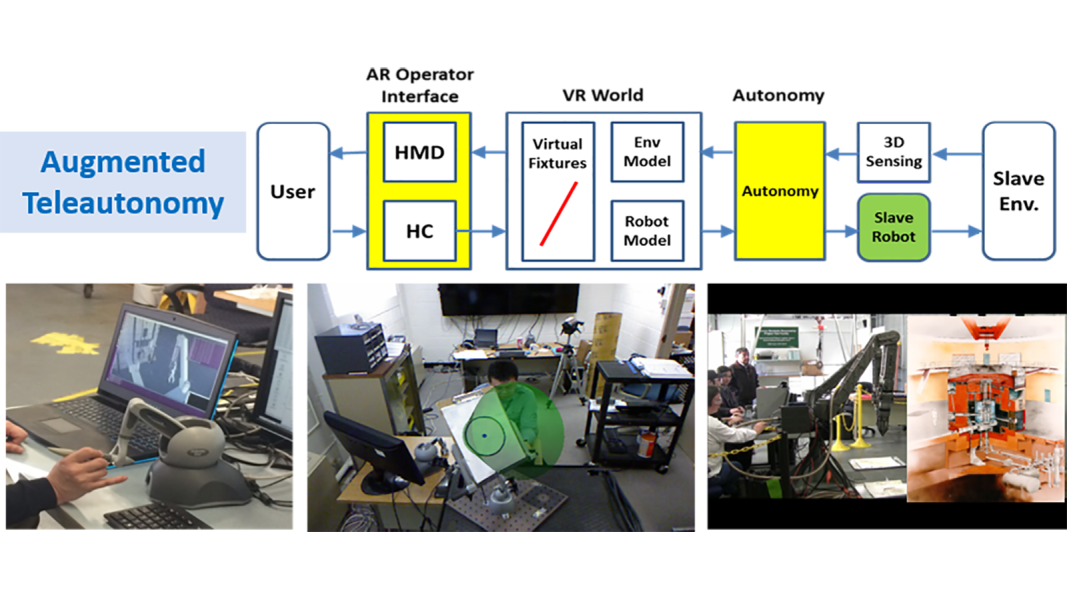

AR Telerobotics System: We have focused on developing enhanced remote-operation technology for nuclear applications by incorporating AR and robot autonomy. In augmented teleoperation, artificial ‘virtual fixtures’ are generated in the operator interface to simplify teleoperation and improve its precision. In teleautonomy, the robot’s autonomous behaviors are blended with the operator’s manual commands to make remote operation more efficient. These technologies are currently being applied to the decontamination and decommissioning of nuclear facilities.
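The two control ideas in the paragraph above can be illustrated with a minimal sketch. It assumes a *guidance* virtual fixture that passes the component of the operator's commanded velocity along a preferred direction and attenuates the perpendicular component, and a simple linear arbitration for teleautonomy in which a weight `alpha` mixes manual and autonomous velocity commands. The function names, the `compliance` and `alpha` parameters, and the linear blending rule are hypothetical choices for illustration, not a description of the laboratory's system.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def guidance_fixture(v_cmd: Vec3, guide_dir: Vec3, compliance: float) -> Vec3:
    """Hypothetical guidance virtual fixture.

    Keeps the part of the operator's commanded velocity that lies along
    `guide_dir` and scales the perpendicular part by `compliance`
    (0.0 = motion confined to the guide direction, 1.0 = fixture off).
    """
    if not 0.0 <= compliance <= 1.0:
        raise ValueError("compliance must be in [0, 1]")
    norm = math.sqrt(_dot(guide_dir, guide_dir))
    if norm == 0.0:
        raise ValueError("guide_dir must be non-zero")
    d = tuple(c / norm for c in guide_dir)   # unit guide direction
    along = _dot(v_cmd, d)                   # signed speed along the guide
    parallel = tuple(along * c for c in d)   # component kept unchanged
    # parallel + compliance * (perpendicular component)
    return tuple(p + compliance * (v - p) for v, p in zip(v_cmd, parallel))


def blend_commands(v_manual: Vec3, v_auto: Vec3, alpha: float) -> Vec3:
    """Hypothetical linear shared-control arbitration.

    alpha = 0.0 gives pure manual control, alpha = 1.0 pure autonomy.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return tuple((1.0 - alpha) * m + alpha * a for m, a in zip(v_manual, v_auto))


if __name__ == "__main__":
    # Operator pushes diagonally; a hard fixture keeps only the x-axis component.
    print(guidance_fixture((1.0, 1.0, 0.0), (1.0, 0.0, 0.0), compliance=0.0))
    # Mix 75% manual command with 25% autonomous command.
    print(blend_commands((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), alpha=0.25))
```

With `compliance = 0.0` the fixture acts as a hard constraint along the guide direction; intermediate values let the operator deviate with reduced gain. A real system could also vary `alpha` during a task, for example as the autonomous controller's confidence changes; that policy is outside this sketch.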

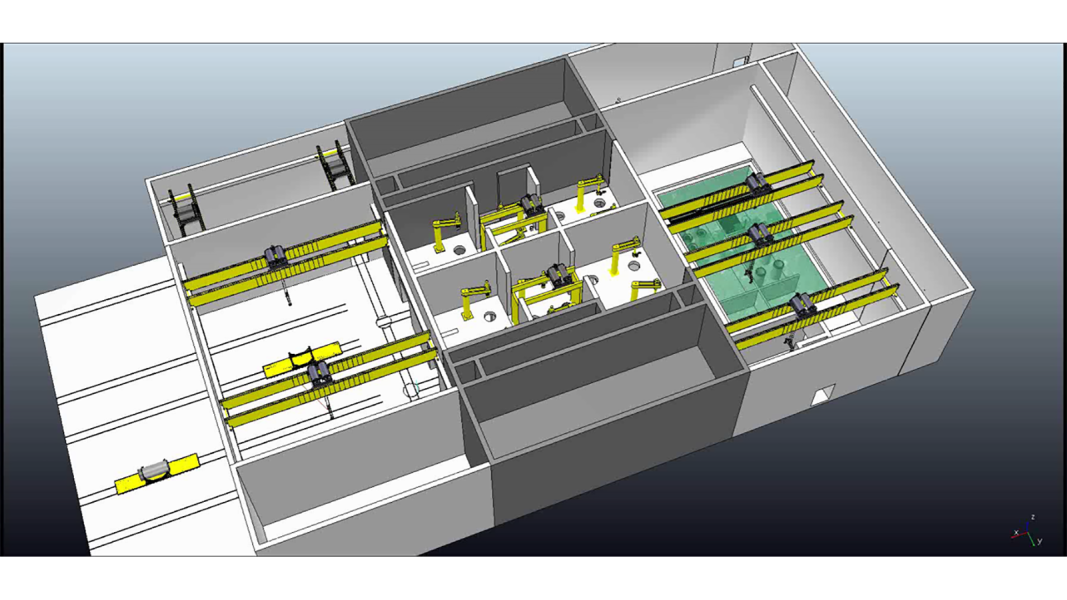

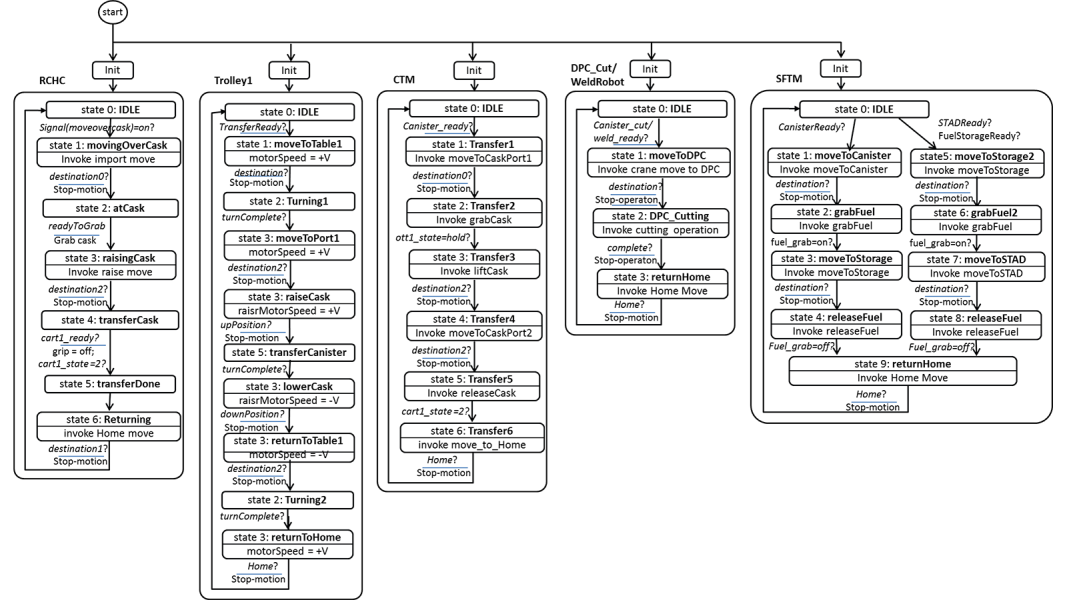

Digital (Virtual) Simulation: Designing and developing robotic and remote systems for automated facilities is a complex and expensive process. To mitigate these costs, recent advances in VR technology can be used to iterate on designs and to optimize and validate construction and operation digitally, without building physical prototypes. We have developed virtual modeling and simulation capabilities for various nuclear and manufacturing facilities, reducing the cost and time that physical prototyping would otherwise require.

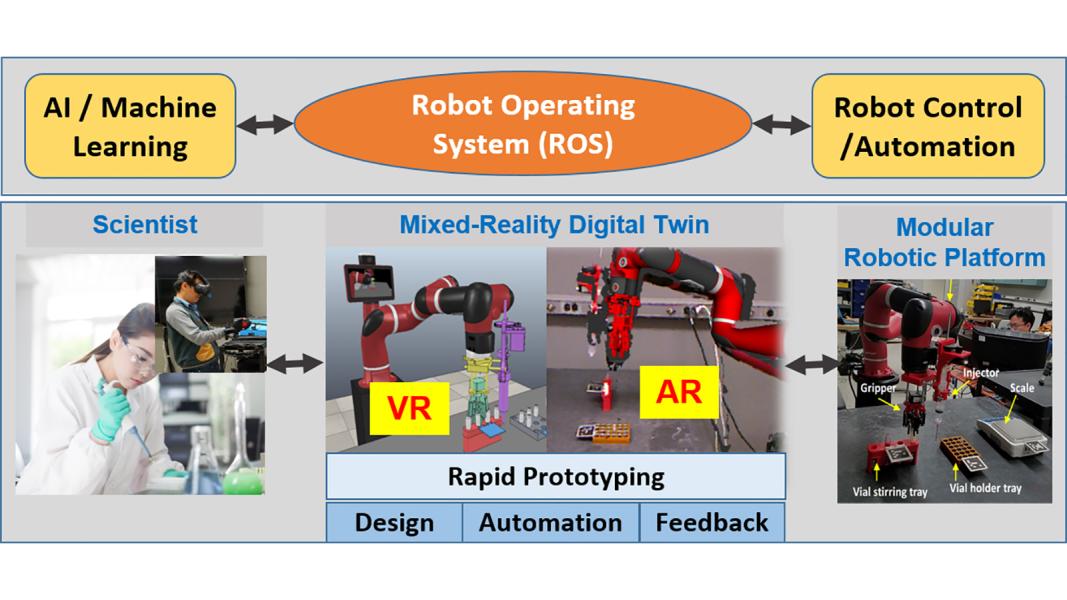

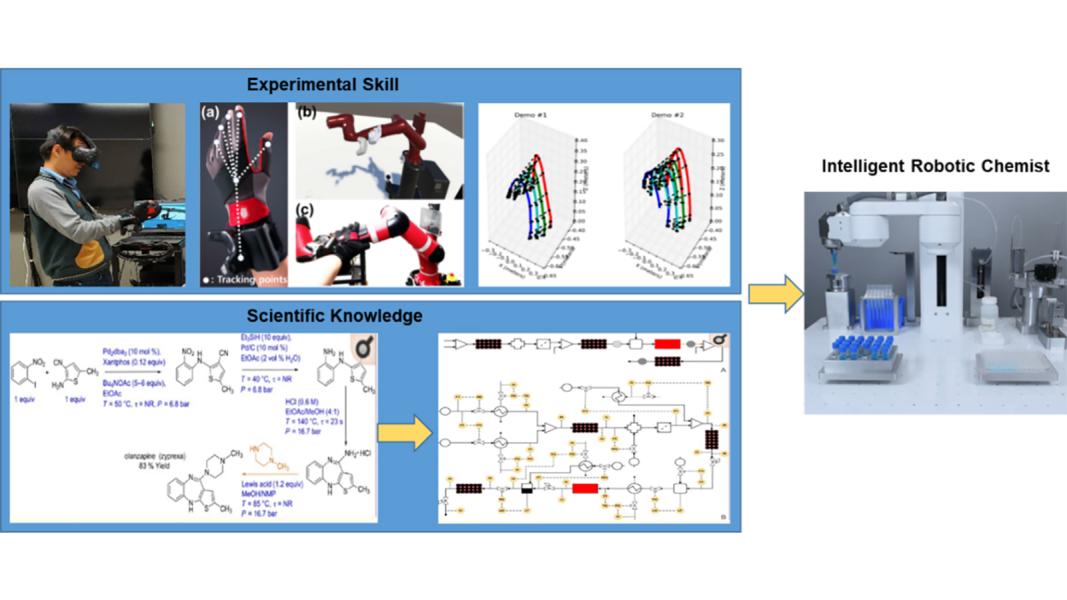

Robotics for Autonomous Materials Discovery: We have developed a robotic platform for autonomous materials discovery and intelligent scientific processes. It incorporates a mixed-reality (MR) platform that serves as a rapid-prototyping environment covering the end-to-end materials development process (design, synthesis, and feedback optimization) as well as the human-machine interface.

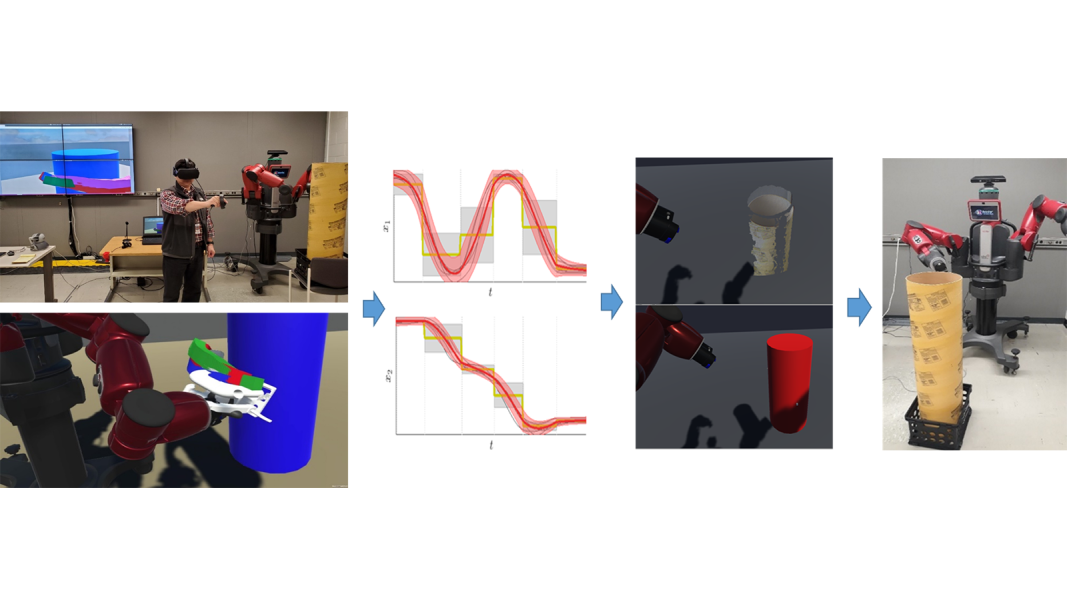

Programming by Demonstration: We are developing intelligent methods to analyze, and provide automation solutions for, difficult, complex, and ill-defined applications. For example, we have developed a new machine-learning-based approach to robot programming by demonstration (PbD). In this approach, task skills (motion primitives) are extracted by observing a human demonstration and then adapted to the task environment as observed through real-time sensing. This technology is being applied to autonomous materials synthesis and to the dismantling of nuclear facilities.
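The paragraph above does not say how motion primitives are represented. One widely used representation is the dynamic movement primitive (DMP) of Ijspeert and colleagues, and the sketch below uses it as a stand-in: it learns a one-dimensional DMP from a single demonstration and replays it toward a new goal, a simple form of adapting a learned skill to a changed environment. The class name `DMP1D`, the gains, the number of basis functions, and the synthetic minimum-jerk demonstration are illustrative assumptions; this is not the laboratory's PbD method.

```python
import math
from typing import List


class DMP1D:
    """Minimal one-dimensional discrete dynamic movement primitive (sketch).

    Transformation system:  tau*dz = k*(g - y) - d*z + (g - y0)*f(x),  tau*dy = z
    Canonical system:       tau*dx = -alpha_x * x
    f(x) is a normalised sum of Gaussian basis functions scaled by x, so its
    influence fades as the phase x decays and y settles at the goal g.
    """

    def __init__(self, n_basis: int = 20, k: float = 100.0, alpha_x: float = 4.0):
        if n_basis < 2:
            raise ValueError("need at least two basis functions")
        self.k = k
        self.d = 2.0 * math.sqrt(k)  # critical damping
        self.alpha_x = alpha_x
        # Centres evenly spaced in time along the phase x(t) = exp(-alpha_x * t / tau).
        self.c = [math.exp(-alpha_x * i / (n_basis - 1)) for i in range(n_basis)]
        self.h = [1.0 / (self.c[i + 1] - self.c[i]) ** 2 for i in range(n_basis - 1)]
        self.h.append(self.h[-1])
        self.w = [0.0] * n_basis

    def _psi(self, x: float) -> List[float]:
        return [math.exp(-h * (x - c) ** 2) for h, c in zip(self.h, self.c)]

    def _forcing(self, x: float) -> float:
        psi = self._psi(x)
        return x * sum(p * w for p, w in zip(psi, self.w)) / (sum(psi) + 1e-10)

    def fit(self, demo: List[float], dt: float) -> None:
        """Learn basis weights from one demonstrated trajectory sampled every dt."""
        n = len(demo)
        self.tau = (n - 1) * dt
        self.y0, self.g = demo[0], demo[-1]
        if self.g == self.y0:
            raise ValueError("demonstration must end at a goal different from its start")
        # Backward finite differences for velocity and acceleration.
        vel = [0.0] + [(demo[i] - demo[i - 1]) / dt for i in range(1, n)]
        acc = [0.0] + [(vel[i] - vel[i - 1]) / dt for i in range(1, n)]
        xs = [math.exp(-self.alpha_x * i * dt / self.tau) for i in range(n)]
        # Forcing term that would make the DMP reproduce the demonstration exactly.
        f_target = [
            (self.tau ** 2 * acc[i] - self.k * (self.g - demo[i]) + self.d * self.tau * vel[i])
            / (self.g - self.y0)
            for i in range(n)
        ]
        # Locally weighted regression: one weight per basis function.
        for j in range(len(self.w)):
            num = den = 0.0
            for x, f in zip(xs, f_target):
                psi = math.exp(-self.h[j] * (x - self.c[j]) ** 2)
                num += psi * x * f
                den += psi * x * x
            self.w[j] = num / den if den > 0.0 else 0.0

    def rollout(self, goal: float, duration: float, dt: float) -> List[float]:
        """Integrate the DMP (explicit Euler) from the demonstrated start toward goal."""
        y, z, x = self.y0, 0.0, 1.0
        traj = [y]
        for _ in range(int(round(duration / dt))):
            f = self._forcing(x)
            dz = (self.k * (goal - y) - self.d * z + (goal - self.y0) * f) / self.tau
            dy = z / self.tau
            dx = -self.alpha_x * x / self.tau
            z, y, x = z + dz * dt, y + dy * dt, x + dx * dt
            traj.append(y)
        return traj


if __name__ == "__main__":
    dt = 0.001
    # Synthetic demonstration: minimum-jerk motion from 0 to 1 over 1 s.
    demo = [10 * s**3 - 15 * s**4 + 6 * s**5 for s in (i / 1000 for i in range(1001))]
    dmp = DMP1D()
    dmp.fit(demo, dt)
    same_goal = dmp.rollout(goal=1.0, duration=2.0, dt=dt)
    new_goal = dmp.rollout(goal=2.0, duration=2.0, dt=dt)
    print(f"final positions: {same_goal[-1]:.3f}, {new_goal[-1]:.3f}")
```

Because the forcing term is scaled by `(goal - y0)`, the reproduced motion keeps the demonstrated shape while converging to the new goal. In a sensing-driven system, that goal would come from perception rather than being passed in by hand.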

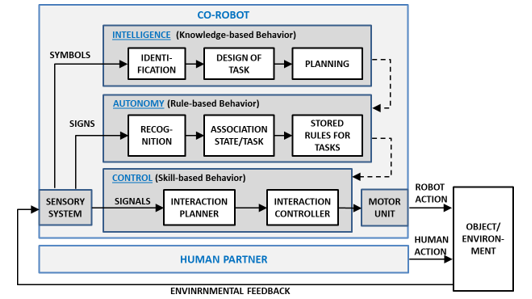

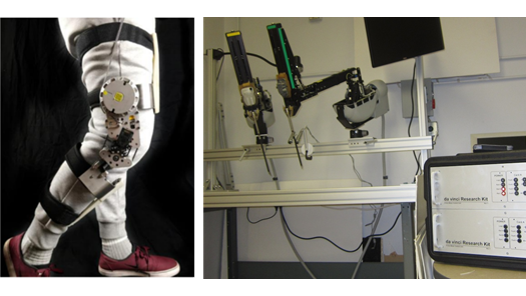

Human-Robot Interaction: We have worked on haptic skin sensors that can be fitted to robotic devices and on control methods for physical human-robot interaction. This R&D has broad potential applications in next-generation human-collaborative robot systems, such as industrial co-robots, wearable assistive devices, and medical telerobotic systems.

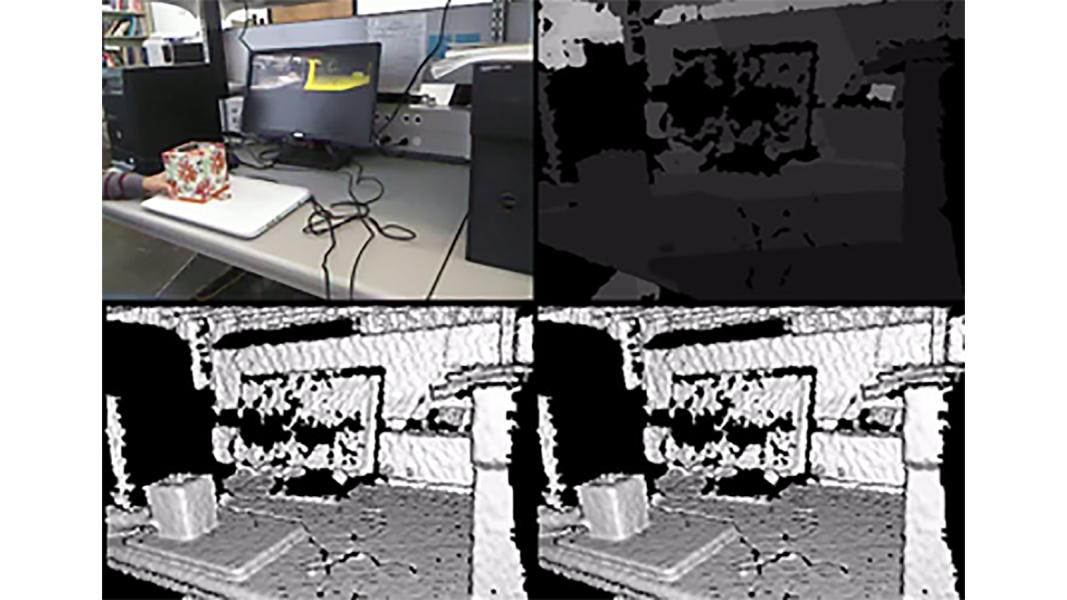

3D Sensing and Reconstruction: Using 3D sensors, we have developed technology for sensing and reconstructing 3D environments in robotic applications. Our technology is uniquely capable of real-time reconstruction of dynamic objects and of multimodal display, rendering both visual and haptic feedback. The technology has been applied to enhanced telerobotic systems.
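A common first step in reconstructing an environment from a depth sensor is to back-project each depth pixel into a 3D point with the pinhole camera model, X = (u − cx)·Z/fx and Y = (v − cy)·Z/fy. The sketch below assumes a depth image stored as rows of metric depths, hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, and a value of zero marking a missing reading; it illustrates the geometry only and is not the laboratory's real-time reconstruction pipeline.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_points(depth: List[List[float]], fx: float, fy: float,
                    cx: float, cy: float) -> List[Point]:
    """Back-project a depth image into camera-frame 3D points (pinhole model).

    depth[v][u] is the metric depth Z at pixel row v, column u; values <= 0
    are treated as missing readings and skipped.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0.0:
                continue  # no valid sensor return at this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # A tiny 2x2 depth image with one missing pixel and unit intrinsics.
    print(depth_to_points([[1.0, 1.0], [0.0, 2.0]], fx=1.0, fy=1.0, cx=0.0, cy=0.0))
```

Real-time dynamic reconstruction would build on this step, for example by fusing successive frames and tracking moving objects; those stages are beyond this sketch.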