Large-scale solar energy development could impact birds, but field surveys of bird carcasses do not provide sufficient data to accurately understand the nature and magnitude of impact. Because data collection does not take place in real time, these methods cannot be used to observe bird behavior around solar panels that could indicate causes of fatality, sources of attraction, and potential benefits to birds.

In collaboration with Argonne’s Strategic Security Sciences and Mathematics and Computer Science divisions, EVS is developing a technology to continuously collect data on avian-solar interactions (e.g., perching, fly-through, and collisions) in a project funded by the U.S. Department of Energy Solar Energy Technologies Office. The system combines a computer vision approach supported by machine/deep learning (ML/DL) algorithms with high-definition edge-computing cameras. The automated avian monitoring technology will make it possible to collect a large volume of avian-solar interaction data and so better understand potential avian impacts associated with solar energy facilities. An automated method is the most timely and cost-effective option for collecting such data across large areas.

Data Collection Workflow

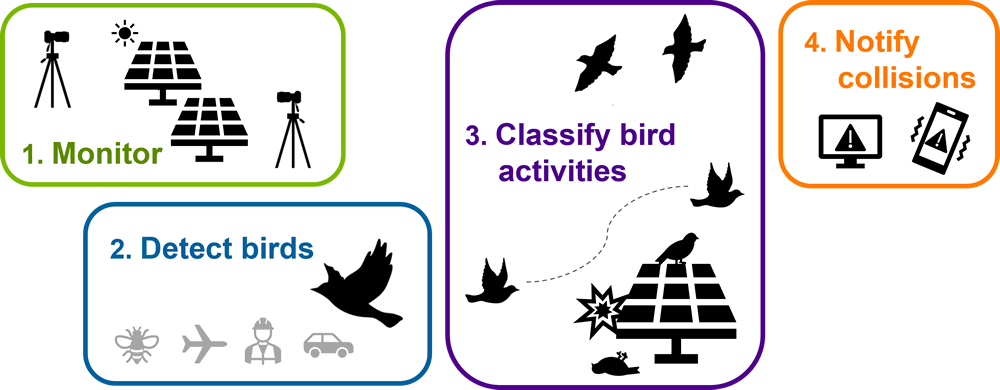

Our avian-solar interaction data collection workflow consists of four actions: Monitor, Detect, Classify, and Notify.

We will process video with an ML/DL model running inside each high-definition, edge-computing camera, collecting observations in near real time and reporting them to facility personnel.

- Monitor user-specified areas continuously using high-definition cameras.

- Detect birds as soon as they come into view while disregarding other moving objects.

- Classify bird activities around solar panels in near real time based on the birds' movement.

- Notify users of bird collision incidents shortly after they occur and provide a summary of bird monitoring.
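The four actions above can be read as a simple processing loop. The following is a minimal sketch of that loop, not the project's implementation: the function `run_workflow`, its callback parameters (`detect_birds`, `classify_activity`, `notify`), and the label string `"collide with panel"` are all hypothetical names chosen for illustration. It also simplifies classification to one label per detection, whereas the workflow described here classifies activities from bird movement over time.

```python
from typing import Callable, Dict, Iterable, List

# Hypothetical label used to decide when to alert facility personnel.
COLLISION = "collide with panel"


def run_workflow(
    frames: Iterable[object],
    detect_birds: Callable[[object], List[object]],
    classify_activity: Callable[[object], str],
    notify: Callable[[int, str], None],
) -> Dict[str, int]:
    """Run Monitor -> Detect -> Classify -> Notify and return activity counts."""
    summary: Dict[str, int] = {}
    # Monitor: consume frames from the camera stream one by one.
    for frame_index, frame in enumerate(frames):
        # Detect: the detector is assumed to return birds only,
        # having already discarded other moving objects.
        for bird in detect_birds(frame):
            # Classify: assign an activity label to the detected bird.
            activity = classify_activity(bird)
            summary[activity] = summary.get(activity, 0) + 1
            # Notify: report collisions as soon as they are classified.
            if activity == COLLISION:
                notify(frame_index, activity)
    # The returned counts stand in for the monitoring summary.
    return summary
```

Passing the detector, classifier, and notifier in as callbacks keeps the loop independent of any particular model or alerting channel, so each stage can be swapped or stubbed out separately.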

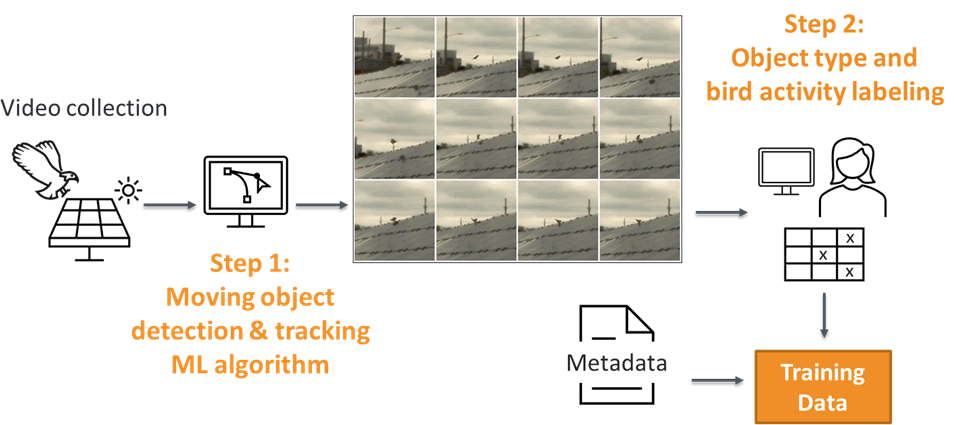

AI-Human Collaboration

An ML/DL model for collecting avian-solar interaction data requires training data collected under specific settings. Because such data are not available, we are generating custom training data in collaboration with AI. We record 8,000+ hours of daytime video at multiple operational solar energy facilities and run an ML algorithm that detects and tracks moving objects in the video. For each moving object, the algorithm generates an image sequence and its animation and records characteristics of the object (e.g., trajectory, speed, relative size). Trained human annotators label the object type and activity only if the object is a bird. This AI-human collaboration approach greatly improves the efficiency of training data collection for this unique project.
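To make the recorded object characteristics concrete, here is a minimal sketch of how trajectory, speed, and relative size could be derived from a tracked object's bounding boxes. Everything in it is an assumption for illustration: the `(x, y, width, height)` pixel box format, the `TrackSummary` and `summarize_track` names, speed measured in pixels per second, and relative size defined as mean box area divided by frame area. The project's actual definitions may differ.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

# Assumed box format: (x, y, width, height) in pixels, one box per frame.
Box = Tuple[float, float, float, float]


@dataclass
class TrackSummary:
    trajectory: List[Tuple[float, float]]  # box centers, in frame order
    mean_speed: float                      # pixels per second
    relative_size: float                   # mean box area / frame area


def summarize_track(
    boxes: List[Box], fps: float, frame_width: int, frame_height: int
) -> TrackSummary:
    """Summarize one tracked object from its per-frame bounding boxes."""
    if not boxes:
        raise ValueError("a track needs at least one bounding box")
    # Trajectory: the center of each bounding box.
    centers = [(x + w / 2, y + h / 2) for x, y, w, h in boxes]
    # Speed: total path length divided by the elapsed time of the track.
    path_length = sum(math.dist(a, b) for a, b in zip(centers, centers[1:]))
    duration = (len(boxes) - 1) / fps
    mean_speed = path_length / duration if duration > 0 else 0.0
    # Relative size: average box area as a fraction of the frame area.
    mean_area = sum(w * h for _, _, w, h in boxes) / len(boxes)
    return TrackSummary(centers, mean_speed, mean_area / (frame_width * frame_height))
```

Summaries of this kind give annotators a compact description of each moving object alongside its image sequence.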

Types of Bird Activities

Our current technology development focuses on five bird activities: (1) perch on panel, (2) fly‑through between panels, (3) fly over panels, (4) land on ground, and (5) collide with panel.
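As an illustration, the five activities could be encoded as a fixed label set for annotation and classification. This is a hypothetical sketch: the `BirdActivity` enum, the `parse_label` helper, and the `"bird"` object-type string are assumed names, and the numeric values simply mirror the numbering in the list above.

```python
from enum import Enum
from typing import Optional


class BirdActivity(Enum):
    """The five activities, numbered as in the text above."""
    PERCH_ON_PANEL = 1
    FLY_THROUGH_BETWEEN_PANELS = 2
    FLY_OVER_PANELS = 3
    LAND_ON_GROUND = 4
    COLLIDE_WITH_PANEL = 5


def parse_label(object_type: str, activity_id: Optional[int]) -> Optional[BirdActivity]:
    """Return the activity for a bird; other objects carry no activity label."""
    if object_type != "bird":
        return None
    if activity_id is None:
        raise ValueError("a bird annotation needs an activity id")
    # Raises ValueError for ids outside 1..5.
    return BirdActivity(activity_id)
```

Using an enum rather than free-text labels keeps annotations consistent and makes an invalid activity fail loudly instead of passing silently into the training data.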
