These are particularly dangerous because no indication is given during the execution that the data are corrupted – and hence that the results are incorrect.

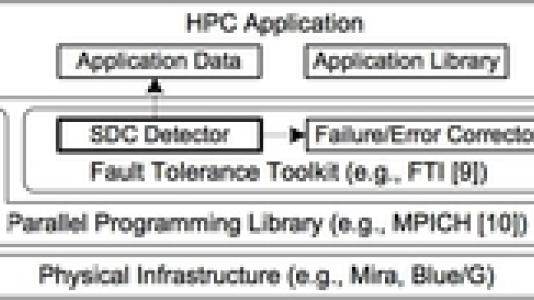

Not unexpectedly, this situation has prompted numerous attempts to detect and correct SDCs. For correction, checkpoint/restart has been studied extensively for years, but these methods incur high execution overhead. Similarly, replica-based detection, which creates replica processes or messages to detect and correct possible errors, demands highly redundant resources. Algorithm-based fault tolerance protects against errors through analysis of linear algebra or matrix operations, but this approach is specific to particular kernels and applications.

Two researchers from Argonne’s Mathematics and Computer Science Division – Sheng Di, a postdoctoral appointee, and Franck Cappello, a senior computer scientist – have teamed up to create a novel adaptive impact-driven detector designed to protect a large set of high-performance computing applications from influential SDCs.

A key feature of their detection strategy is to target only the influential SDCs rather than every possible corruption. “We argue that one should not blindly enhance the detection ability with regard to SDCs,” said Di. “Instead, our objective is to keep the number of false positives fairly low, while allowing some SDCs to remain – provided that their impact is low enough from the perspective of users.”

Another key feature of their approach is adaptability. The adaptive method was motivated by a study of 18 real-world HPC applications, including hydrodynamics, gravity, diffusion, and computational fluid dynamics. Careful characterization showed that the smoothness of the time series data in these applications was closely related to how accurately upcoming values could be predicted. But because the smoothness can change sharply at a few time steps and because the data handled by different processes may evolve differently at different time steps, an adaptive method was essential.

The model is also impact-driven. To detect the so-called influential SDCs, the researchers analyzed various factors, including the value range of the state variable, and then formulated an impact error bound. And to control the impact of the SDCs on demand, they introduced an impact error bound ratio, a bound on the relative data change, so that the impact of SDCs can be kept low throughout the whole execution period.
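To make the idea concrete, here is a minimal sketch of how a user-chosen ratio could be turned into an absolute error bound. It assumes that the bound is simply the ratio multiplied by the value range of the state variable; that formula, the function name `impact_error_bound`, and its parameters are illustrative assumptions, not the exact formulation in the full report.

```python
from typing import Sequence


def impact_error_bound(values: Sequence[float], ratio: float) -> float:
    """Return an absolute error bound for one state variable.

    Assumption (not taken from the report): the bound is the user-chosen
    ratio multiplied by the value range (max - min) of the variable.
    """
    if not values:
        raise ValueError("values must not be empty")
    if ratio < 0:
        raise ValueError("ratio must be non-negative")
    value_range = max(values) - min(values)
    return ratio * value_range
```

Under this assumption, a variable that spans 10 units with a ratio of 0.25 would tolerate deviations of up to 2.5 units; a smaller ratio tightens the bound and treats more corruptions as influential.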

The detector devised by the Argonne researchers uses one-step-ahead prediction: at each time step it dynamically predicts the value of each data point and checks whether the observed value falls within a normal value range around that prediction. The detector selects a best-fit prediction method that stays within the impact error bound while offering high prediction accuracy and low memory cost. If no method meets these requirements, the detector degrades gracefully by falling back to the simplest prediction method, which has the lowest memory cost. The detection range is also tuned dynamically based on false positive events.
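The following sketch illustrates the general shape of such a detector for a single data point. It is not the researchers' implementation: the three extrapolation predictors, the selection rule (cheapest method whose past errors stay within the bound), and the multiplicative widening of the detection range are all assumptions chosen for illustration, as are the names `PREDICTORS`, `select_predictor`, `is_suspect`, and `widen_on_false_positive`.

```python
from typing import Callable, List, Sequence, Tuple

# (name, number of past values kept, prediction function).
# Hypothetical candidates, ordered from lowest to highest memory cost.
Predictor = Tuple[str, int, Callable[[Sequence[float]], float]]

PREDICTORS: List[Predictor] = [
    ("last_value", 1, lambda h: h[-1]),
    ("linear", 2, lambda h: 2 * h[-1] - h[-2]),
    ("quadratic", 3, lambda h: 3 * h[-1] - 3 * h[-2] + h[-3]),
]


def max_past_error(history: Sequence[float], need: int,
                   predict: Callable[[Sequence[float]], float]) -> float:
    """Largest one-step-ahead error the predictor made on the history."""
    errors = [abs(predict(history[t - need:t]) - history[t])
              for t in range(need, len(history))]
    # Too little history to judge the predictor: treat it as unusable.
    return max(errors) if errors else float("inf")


def select_predictor(history: Sequence[float], error_bound: float) -> Predictor:
    """Pick the cheapest predictor whose past errors fit within the bound.

    If none qualifies, fall back to the simplest, lowest-memory method.
    """
    for candidate in PREDICTORS:
        _, need, predict = candidate
        if max_past_error(history, need, predict) <= error_bound:
            return candidate
    return PREDICTORS[0]


def is_suspect(history: Sequence[float], observed: float,
               predictor: Predictor, radius: float) -> bool:
    """Flag the observation if it lies outside prediction +/- radius."""
    _, need, predict = predictor
    return abs(observed - predict(history[-need:])) > radius


def widen_on_false_positive(radius: float, growth: float = 1.5) -> float:
    """Enlarge the detection range after a confirmed false positive."""
    return radius * growth
```

For a steadily increasing series such as `[0.0, 1.0, 2.0, 3.0, 4.0, 5.0]` with an error bound of 0.5, this sketch rejects the last-value predictor and settles on linear extrapolation, so an observed value of 6.0 passes while 8.0 is flagged. A real detector would run this per data point and per process, which is why memory cost per predictor matters.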

The researchers evaluated their innovative method on up to 1,024 cores of the Fusion cluster at Argonne National Laboratory. The results show that the method can detect 80–99.99% of influential SDCs, with memory cost and detection overhead reduced to 15% and 6.3%, respectively.

For the full report, see Sheng Di and Franck Cappello, “Adaptive Impact-Driven Detection of Silent Data Corruption for HPC Applications,” IEEE Transactions on Parallel and Distributed Systems, Jan. 2016, doi: 10.1109/TPDS.2016.2517639.