NWChem is a widely used computational chemistry application suite for simulating chemical and biological systems. Because such simulations often operate on data sets too large to fit on a single node, data must be shared across nodes. This is typically done with the Global Arrays (GA) programming model, which is implemented on various computer platforms using MPI.

Unfortunately, most of these MPI implementations lack fully asynchronous communication capabilities, and the existing workarounds are costly. Thread-based models require deploying at least as many background threads as MPI processes. Because current MPI implementations repeatedly poll the network for incoming messages, each background thread keeps a core busy, so this approach can waste half the computing resources and hurt the performance and scalability of computation-intensive applications. Interrupt-based models instead issue hardware interrupts to wake a thread that processes incoming messages. Handling these interrupts on cores that are otherwise devoted to computation forces those cores to stop computing temporarily, resulting in high overhead.
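The "half the computing resources" figure can be made concrete with simple core accounting. The sketch below assumes that in the thread-based model each MPI process is paired with one continuously polling background thread pinned to its own core, and contrasts it with a model that reserves a small, fixed number of cores per node for communication progress. The function names and the 24-core node are hypothetical illustrations, not figures from the study.

```python
def thread_based_compute_cores(cores_per_node: int) -> int:
    """Cores left for computation when every MPI process has a polling helper thread.

    Assumes each helper thread spins on its own core, so compute processes
    and helper threads split the node's cores evenly.
    """
    return cores_per_node // 2


def ghost_process_compute_cores(cores_per_node: int, ghosts_per_node: int) -> int:
    """Cores left for computation when a fixed number of cores per node handle progress."""
    if not 0 < ghosts_per_node < cores_per_node:
        raise ValueError("need at least one progress core and at least one compute core")
    return cores_per_node - ghosts_per_node


if __name__ == "__main__":
    cores = 24  # hypothetical node size
    print(thread_based_compute_cores(cores))       # 12
    print(ghost_process_compute_cores(cores, 1))   # 23
```

The point of the comparison is that the overhead of the first model grows with the number of application processes, while the second costs a constant number of cores per node that the user can tune.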

“The effect on performance is especially evident in large water molecule simulations, where computational efficiency may be as low as 50%,” said Min Si, a guest graduate student in the Mathematics and Computer Science Division at Argonne National Laboratory and a doctoral candidate at the University of Tokyo.

She explained that computational efficiency differs from parallel efficiency, a commonly used performance metric that can be artificially high when it is measured against an inefficient base execution (that is, one that communicates inefficiently). Computational efficiency instead is the ratio of computation time to total execution time, which includes both computation and communication time. A high computational efficiency therefore means the parallel execution spends little of its time on communication.
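A small numeric sketch shows how the two metrics can disagree. It assumes total execution time is simply computation time plus non-overlapped communication time, and uses the usual strong-scaling definition of parallel efficiency relative to a base run. All timings and process counts are hypothetical.

```python
def computational_efficiency(compute_time: float, comm_time: float) -> float:
    """Fraction of total execution time spent computing (assumes no overlap)."""
    total = compute_time + comm_time
    if total <= 0:
        raise ValueError("total execution time must be positive")
    return compute_time / total


def parallel_efficiency(base_time: float, base_procs: int,
                        time: float, procs: int) -> float:
    """Strong-scaling efficiency relative to a base run (1.0 means perfect scaling)."""
    return (base_time * base_procs) / (time * procs)


if __name__ == "__main__":
    # Hypothetical base run: 1,000 processes, 50 s computing + 50 s communicating.
    base_compute, base_comm, base_procs = 50.0, 50.0, 1000
    # Hypothetical scaled run: 2,000 processes, both phases halve.
    run_compute, run_comm, run_procs = 25.0, 25.0, 2000

    pe = parallel_efficiency(base_compute + base_comm, base_procs,
                             run_compute + run_comm, run_procs)
    ce = computational_efficiency(run_compute, run_comm)
    print(f"parallel efficiency:      {pe:.0%}")   # 100%
    print(f"computational efficiency: {ce:.0%}")   # 50%
```

Because the base run already wastes half its time communicating, the scaled run looks perfectly efficient by the parallel metric even though half of every core's time is still lost.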

Si and other researchers from Argonne, Intel Labs, and RIKEN (Japan) have now developed “Casper,” a process-based asynchronous progress model that dramatically improves computational scalability.

With Casper, the user specifies a small number of “ghost processes” when launching the application. These processes are set aside from the application processes during MPI initialization. When a process allocates a remotely accessible memory window, Casper intercepts the call and maps that memory into the address space of the ghost processes. It then intercepts all RMA (remote memory access) operations that target user processes on this window and redirects them to the ghost processes. If multiple ghost processes are available on the target node, Casper spreads the communication operations among them, further improving performance.
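The redirection idea can be illustrated with a small, self-contained Python model. Everything here is hypothetical: the class and method names are ours, real interception of MPI calls (for example of `MPI_Win_allocate`, which allocates an RMA window) is not modeled, and the round-robin policy for spreading operations over a node's ghost processes is an assumption rather than Casper's documented policy. The model also assumes each node's user window segments are laid out in one shared region that the node's ghosts can address by offset.

```python
from dataclasses import dataclass
from itertools import cycle
from typing import Dict, Iterator, List, Tuple


@dataclass
class Node:
    user_ranks: List[int]   # ranks visible to the application
    ghost_ranks: List[int]  # ranks reserved for asynchronous progress


class GhostRedirector:
    """Hypothetical model of Casper-style RMA redirection; not Casper's actual code."""

    def __init__(self, nodes: List[Node]) -> None:
        self._node_of: Dict[int, int] = {}
        self._ghosts: Dict[int, Iterator[int]] = {}
        for node_id, node in enumerate(nodes):
            if not node.ghost_ranks:
                raise ValueError(f"node {node_id} has no ghost processes")
            for rank in node.user_ranks:
                self._node_of[rank] = node_id
            # Round-robin iterator that spreads operations over this node's ghosts.
            self._ghosts[node_id] = cycle(node.ghost_ranks)
        self._base: Dict[int, int] = {}
        self._size: Dict[int, int] = {}

    def allocate_window(self, sizes: Dict[int, int]) -> None:
        """Lay out each user's window segment contiguously in its node's shared region.

        Ghosts on the node see the whole region, so they can reach any
        user's segment through an offset.
        """
        next_free: Dict[int, int] = {}
        for rank in sorted(sizes):
            node_id = self._node_of[rank]
            self._base[rank] = next_free.get(node_id, 0)
            self._size[rank] = sizes[rank]
            next_free[node_id] = self._base[rank] + sizes[rank]

    def redirect(self, target: int, disp: int) -> Tuple[int, int]:
        """Map an RMA op on (target user rank, displacement) to (ghost rank, region offset)."""
        if not 0 <= disp < self._size[target]:
            raise IndexError("displacement outside the target's window")
        ghost = next(self._ghosts[self._node_of[target]])
        return ghost, self._base[target] + disp


if __name__ == "__main__":
    # Two hypothetical nodes, each with two user ranks and two ghost ranks.
    r = GhostRedirector([Node([0, 1], [2, 3]), Node([4, 5], [6, 7])])
    r.allocate_window({0: 100, 1: 100, 4: 64, 5: 64})
    print(r.redirect(1, 8))   # (2, 108)
    print(r.redirect(0, 0))   # (3, 0)
    print(r.redirect(5, 10))  # (6, 74)
```

In this model the user process that owns the memory never has to participate in the operation: the ghost process on the same node services it, which is what lets the user process keep computing.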

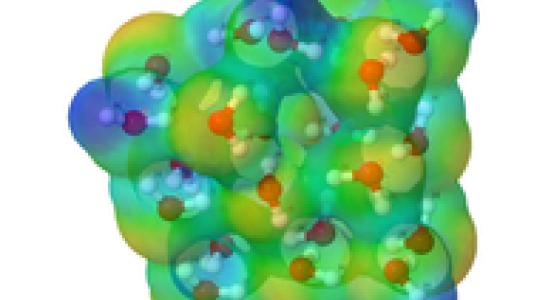

For their simulations of water molecules, the researchers evaluated CCSD(T), a highly accurate method implemented in NWChem. Although it is known as the gold standard for coupled cluster simulations, CCSD(T) achieves only 50% computational efficiency on its most time-consuming portion, the (T) part, when run at large scale. Comparisons with two thread-based approaches showed Casper to be almost twice as fast, attaining close to 100% computational efficiency on up to 12,288 cores of the “Edison” Cray XC30 supercomputer at the National Energy Research Scientific Computing Center.

“We believe these results show that Casper is a more suitable approach than traditional approaches for GA-based applications on multicore and many-core architectures,” said Si. “By allowing users to flexibly control the number of cores dedicated to asynchronous communication and computation, Casper minimizes the performance impact to GA-based applications, which often are dominated by intensive computation.”

The next step is to study other NWChem modules, which may have different performance characteristics than CCSD(T).

This work was presented at the 15th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing (CCGrid 2015), held in China in May 2015. The full paper describing this work is available online.