Disk-to-disk wide-area file transfers involve many subsystems and tunable application parameters that pose significant challenges for detecting bottlenecks, optimizing systems, and predicting performance. As a result, expensive wide-area network resources are often underutilized, and scientific research is delayed. Performance models can be used to address these challenges, but they have not proved generally usable because of a need for extensive online experiments to characterize subsystems.

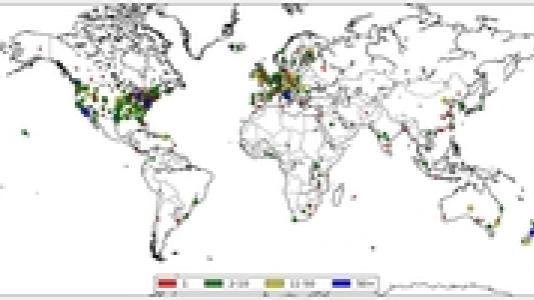

A team of researchers at Argonne National Laboratory has now applied machine learning methods to develop new data transfer performance models using only historical data, thus overcoming the need for online experiments. The models, built by using log data for millions of Globus transfers involving billions of files and hundreds of petabytes, have high explanatory power and provide insight into the behavior of large science data transfers and the factors that affect their behavior.

“Our work is a significant step toward using easily obtainable information sources to explain and improve transfer performance in complex science environments,” said Zhengchun Liu, a postdoctoral researcher in Argonne’s Mathematics and Computer Science Division.

The starting point for this work was Globus log data, from which the researchers formulated a simple analytical model for the maximum achievable end-to-end file transfer rate for a given source and destination. They then extended the model to other endpoints. By reproducing resource load conditions on endpoints during each transfer, the researchers constructed new features that were joined with the log data for training and testing. Regression analysis was then used to explain the relationship between the transfer rate and more than a dozen independent variables, including number of files transferred, number of directories transferred, achieved transfer rates, transfer characteristics, and competing load.
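To make the idea of a "competing load" feature concrete, the sketch below shows one way such a value could be reconstructed from historical log records alone. It is an illustration under stated assumptions, not the researchers' actual feature definition: the `Transfer` record, its field names, and the time-weighted averaging rule are all hypothetical, and each competing transfer is assumed to run at a constant average rate.

```python
from dataclasses import dataclass


@dataclass
class Transfer:
    # Hypothetical log record; field names are assumptions for illustration.
    src: str       # source endpoint
    dst: str       # destination endpoint
    start: float   # start time, in seconds
    end: float     # end time, in seconds (assumed > start)
    nbytes: float  # bytes moved


def overlap(a: Transfer, b: Transfer) -> float:
    """Seconds during which transfers a and b were both active."""
    return max(0.0, min(a.end, b.end) - max(a.start, b.start))


def competing_load(t: Transfer, log: list) -> float:
    """Time-weighted average outbound rate (bytes/s) of the other
    transfers that shared t's source endpoint while t was running."""
    duration = t.end - t.start
    total = 0.0
    for other in log:
        if other is t or other.src != t.src:
            continue
        # Assume each competing transfer ran at its average rate throughout.
        rate = other.nbytes / (other.end - other.start)
        total += rate * overlap(t, other)
    return total / duration


log = [
    Transfer("A", "B", 0, 100, 1000),
    Transfer("A", "C", 50, 150, 2000),
    Transfer("D", "B", 0, 100, 500),
]
for t in log:
    print(t.src, t.dst, competing_load(t, log))
```

A value like this, computed per transfer, could then be joined to the log record as one more column for a regression model; an analogous feature could be built for the destination endpoint.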

“The results show the importance of creating measures of endpoint load to capture the impact of contention for computer, network interface, and storage system resources. For example, the tests suggest that aggregate performance can be improved by scheduling transfers or reducing concurrency and parallelism,” said Liu.

The models also have considerable predictive power. For more than 30,000 transfers over 30 heavily used source-destination pairs, totaling approximately 2,000 terabytes in 46.6 million files, the median absolute percentage error, a popular statistical measure of forecasting accuracy, was just 7.8%.
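The percentage-error measure quoted above is commonly computed as the median absolute percentage error (MdAPE): take the absolute difference between each predicted and observed value, divide by the observed value, and report the median of those percentages. The short sketch below illustrates the calculation; the function name and the sample rates are made up for illustration and are not taken from the study.

```python
from statistics import median


def mdape(actual, predicted):
    """Median absolute percentage error, in percent.

    Assumes every observed value is nonzero.
    """
    errors = [100.0 * abs(a - p) / abs(a) for a, p in zip(actual, predicted)]
    return median(errors)


# Made-up observed and predicted transfer rates (e.g., in MB/s).
observed = [100.0, 200.0, 400.0]
predicted = [110.0, 190.0, 300.0]
print(mdape(observed, predicted))  # per-transfer errors are 10%, 5%, and 25%
```

Because it reports the median rather than the mean, this measure is not dominated by a few transfers with very large errors.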

Plans are already under way to improve transfer service monitoring. For example, the researchers are developing a new version of their models that can include information about non-Globus load on endpoints. Another direction for future work is to explore whether more advanced machine learning methods, such as multiobjective modeling with machine learning, can yield better models.

The work will be presented at the ACM International Symposium on High-Performance Parallel and Distributed Computing in Washington, DC, in June 2017. For more information, see the paper by Z. Liu, P. Balaprakash, R. Kettimuthu, and I. Foster, “Explaining Wide Area Data Transfer Performance,” in ACM International Symposium on High-Performance Parallel and Distributed Computing, Washington, DC, June 2017, available at https://www.researchgate.net/publication/316318232_Explaining_Wide_Area_Data_Transfer_Performance