The paper titled “Scalable Automatic Differentiation of Multiple Parallel Paradigms through Compiler Augmentation” describes the team’s work on Enzyme, an automatic differentiation (AD) framework that efficiently computes derivatives of applications written in various parallel computing dialects and frameworks such as MPI, OpenMP, Julia tasks, and RAJA.

SC is a leading international conference on high-performance computing, networking, storage and analysis. This year more than 11,000 people attended the meeting, which took place in Dallas, Texas, November 13–18, 2022.

Two of the students have close ties with Argonne. William Moses, a graduate student at MIT and lead author of the award-winning paper, is a U.S. Department of Energy Computational Science Graduate Fellowship alum who did a practicum at Argonne, and Ludger Paehler, of the Technical University of Munich, was a research aide in the MCS division. The third student is a doctoral candidate at MIT.

“Argonne provides an excellent environment for students to collaborate with researchers in developing and testing software for scientific computing,” said Sri Hari Krishna Narayanan, a computer scientist in Argonne’s MCS division and a co-author of the award-winning paper. “Together with our staff, the students explored how automatic differentiation could be used to expand Enzyme’s ability to differentiate across a wide variety of parallel models and multiple source languages with a single implementation.”

Multiple frameworks and multiple languages mean multiple challenges

Derivatives are at the core of many science, engineering and machine learning applications. They measure the rate of change of a mathematical function’s output with respect to its input. Since parallel computation has become the de facto standard for large-scale computing, users would like to differentiate programs written in any parallel framework – the distributed parallelism of MPI, for example, and the shared-memory parallelism of OpenMP – in order to exploit the power of heterogeneous resources. Creating derivatives of parallel programs is difficult in itself; in addition, it is desirable to preserve the original program’s parallelism when accumulating the derivatives.
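As a minimal illustration of these two ideas, the Python sketch below hand-writes the derivative of a loop whose iterations are independent and checks it against a finite-difference estimate of the rate of change. It is not Enzyme code, and every name in it (`term`, `dterm`, `f`, `grad_f`, `finite_difference`) is hypothetical. Because each iteration touches only its own element, the derivative loop can be mapped over a thread pool just like the original loop.

```python
from concurrent.futures import ThreadPoolExecutor
import math


def term(x):
    # One independent iteration of the original loop (hypothetical example).
    return math.sin(x) * x


def dterm(x):
    # Hand-written derivative of `term`, obtained with the product rule.
    return math.cos(x) * x + math.sin(x)


def f(xs):
    # Original "parallel loop": independent iterations followed by a sum.
    with ThreadPoolExecutor() as pool:
        return sum(pool.map(term, xs))


def grad_f(xs):
    # Derivative loop: iteration i produces only grad[i], so it can run
    # in parallel in the same way as the original loop.
    with ThreadPoolExecutor() as pool:
        return list(pool.map(dterm, xs))


def finite_difference(xs, i, h=1e-6):
    # Central-difference estimate of the rate of change of f w.r.t. xs[i].
    up = xs[:i] + [xs[i] + h] + xs[i + 1:]
    down = xs[:i] + [xs[i] - h] + xs[i + 1:]
    return (f(up) - f(down)) / (2 * h)


xs = [0.5, 1.0, 2.0]
grad = grad_f(xs)
```

Each entry of `grad` should agree with `finite_difference(xs, i)` to within the accuracy of the estimate. An AD tool generates the equivalent of `dterm` and `grad_f` automatically instead of requiring them to be written by hand.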

The solution presented in the award-winning paper is to shift automatic differentiation to the compiler.

Making a difference with differentiation inside the compiler

According to Moses, who presented the work at SC22, the new approach involved extending the Enzyme AD framework by means of “hooks” for several parallel frameworks representable as a directed acyclic graph of dependencies. Enzyme users can then differentiate parallel programs containing many constructs without needing explicit support for each one. This approach is a clear advantage over other parallel tools, which typically must differentiate every construct in the specific language for which they are designed.
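One way to picture the dependency-graph idea is the toy Python sketch below. It is not Enzyme’s implementation or API: the task names and the `reverse_dag` helper are hypothetical. It only illustrates the general reverse-mode principle that derivative tasks run in the opposite dependency order of the original tasks, so tasks that were independent in the original program remain independent in the derivative.

```python
from graphlib import TopologicalSorter

# Hypothetical forward task graph: each task maps to the tasks it depends on.
forward_deps = {
    "load": set(),
    "compute_a": {"load"},
    "compute_b": {"load"},
    "reduce": {"compute_a", "compute_b"},
}


def reverse_dag(deps):
    # Flip every edge: if `task` depended on `dep` in the forward pass,
    # the adjoint of `dep` depends on the adjoint of `task`.
    reversed_deps = {task: set() for task in deps}
    for task, task_deps in deps.items():
        for dep in task_deps:
            reversed_deps[dep].add(task)
    return reversed_deps


# A valid execution order for the original tasks and for their adjoints.
forward_order = list(TopologicalSorter(forward_deps).static_order())
adjoint_order = list(TopologicalSorter(reverse_dag(forward_deps)).static_order())
```

In this sketch `compute_a` and `compute_b` have no edge between them in either graph, which is why a framework describable as such a graph can, in principle, keep its parallel structure in the derivative computation.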

The research team also added parallel and AD-specific optimizations that enabled them to preserve the scalability of the original nondifferentiated programs.

To demonstrate the generality of the approach, the team differentiated MPI (distributed parallelism), OpenMP (multicore parallelism), and Julia Tasks (multicore parallelism within a just-in-time management strategy). And to highlight the practicality of their approach, they demonstrated Enzyme’s use in multiple versions of two real-world scientific simulation codes: one involving magnetohydrodynamics and the other involving kernels of a molecular density engine.

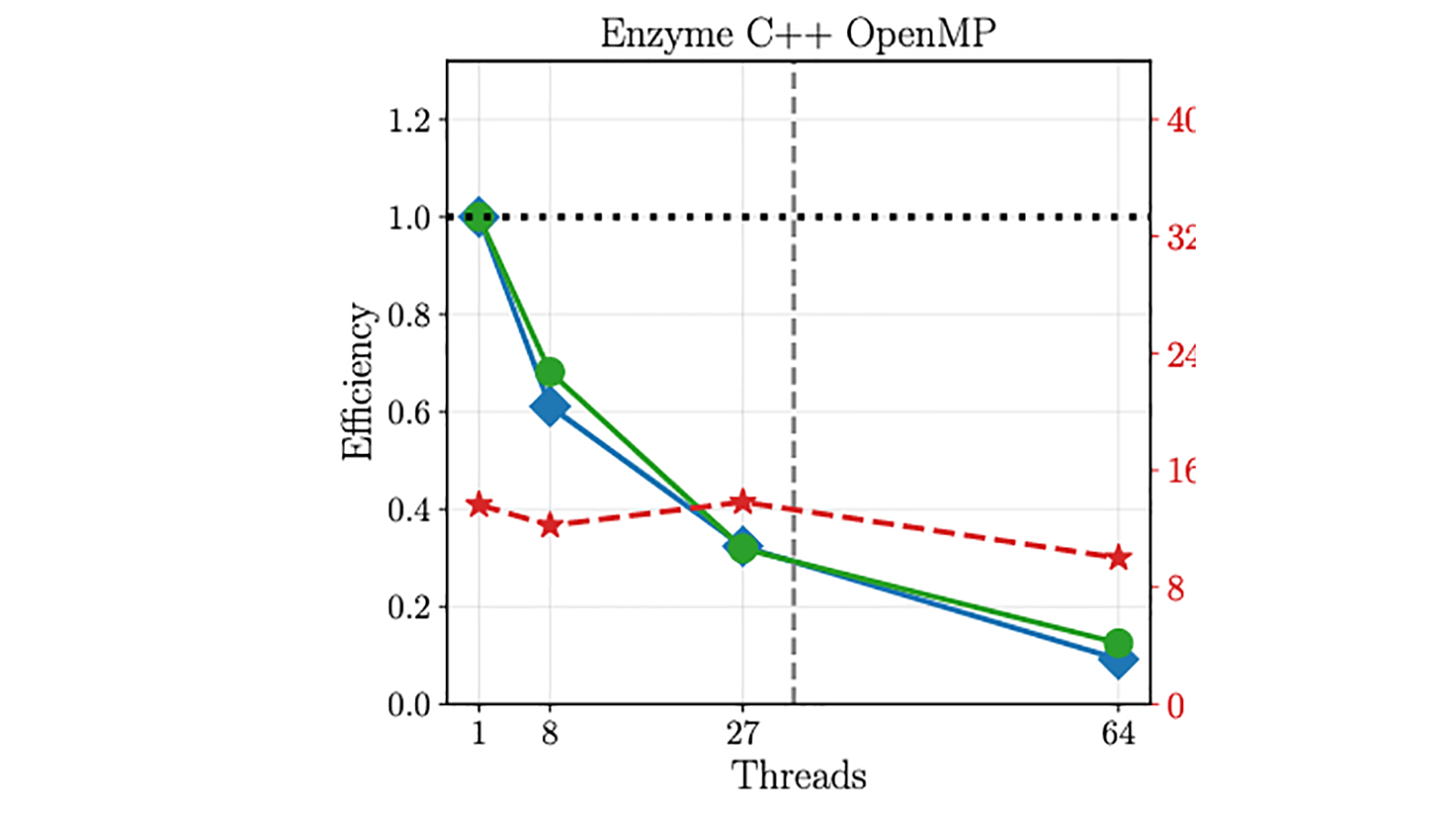

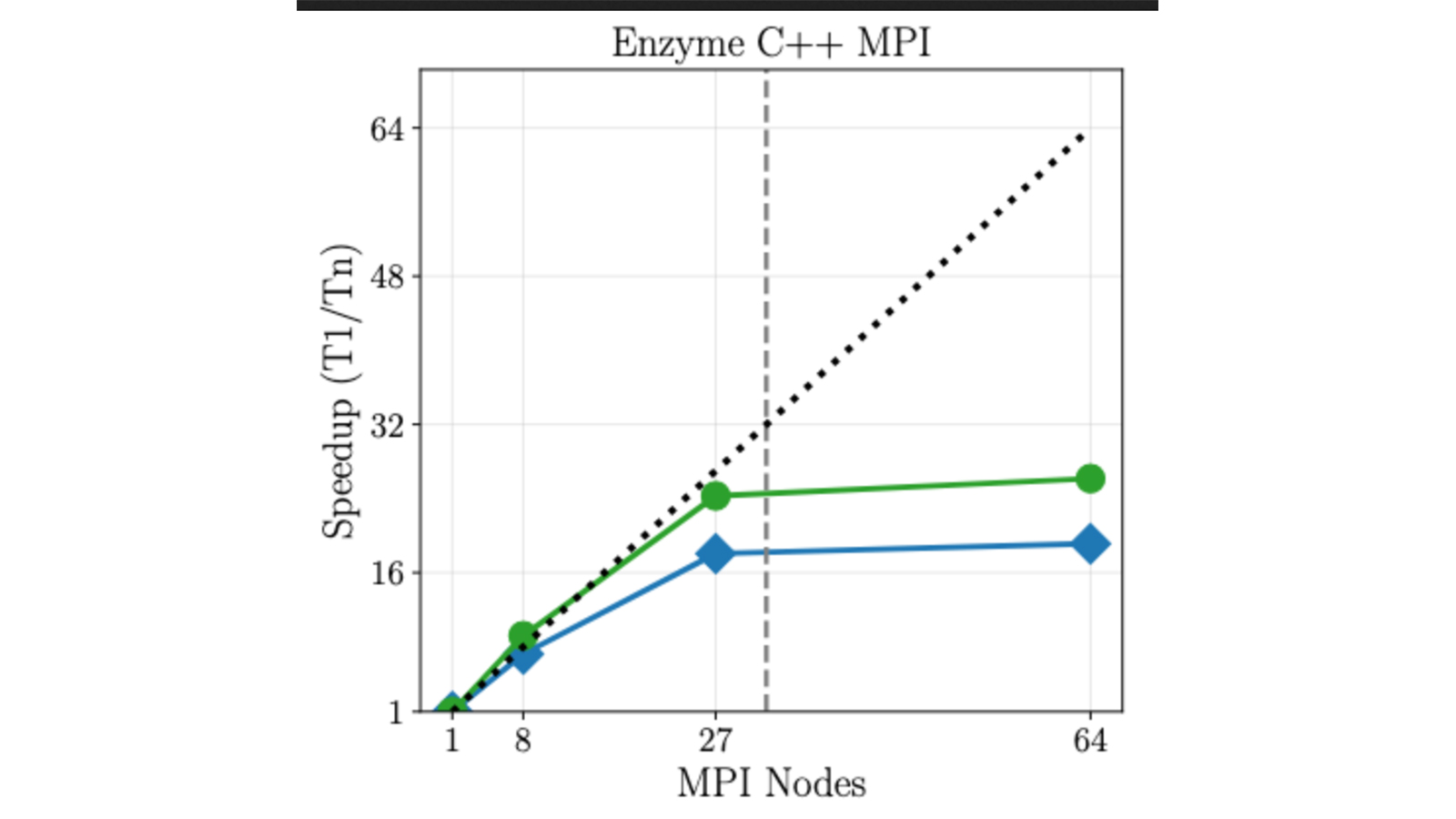

The results in Figures 1 and 2 show that both the weak-scaling and strong-scaling behavior of the derivative computation are equal to or better than that of the original code.

The team emphasized that, in addition to its flexibility, a major benefit of the new approach is that users do not have to use custom adjoint MPI libraries and rewrite their applications.

“With our enhancements of Enzyme to arbitrary parallel frameworks, automatic differentiation of parallel programs is now much more accessible for users,” Narayanan said.

For the award-winning paper, see William Moses, Sri Hari Krishna Narayanan, Ludger Paehler, Valentin Churavy, Michel Schanen, Jan Hueckelheim, Johannes Doerfert, and Paul Hovland, “Scalable Automatic Differentiation of Multiple Parallel Paradigms through Compiler Augmentation,” in SC ’22: Proceedings of the International Conference on High Performance Computing, Networking, Storage and Analysis, November 2022, Article No. 60, pp. 1–18.