These mathematical functions are important in calculus, geometry, physics and many other applications. However, the advent of statistical modeling and machine learning is what earned special functions renewed attention as building blocks of many Gaussian processes.

Special functions have various definitions and often bear the name of the scientist who identified the function and promoted its use. For example, the Bessel function recognizes the role of the German astronomer Friedrich Wilhelm Bessel in generalizing the function’s properties.

Although Argonne cannot boast any eponymous special function, it does have the distinction of pioneering the development of special function software libraries beginning in the early 1970s.

A bit of history

Spearheading these early efforts was William J. (“Jim”) Cody, a scientist in the Applied Mathematics Division (later the Mathematics and Computer Science division) at Argonne. Recognizing that the special function routines at the time were inefficient, lacked accuracy, and often were not portable across computing platforms, Cody set about designing high-quality software packages of functions. The results were FUNPACK, which met the requirements of reliability and robustness but not portability, and SPECFUN, which traded the last bit of accuracy to get portability, while retaining the overall robustness of FUNPACK. The packages were quickly adopted worldwide..

A new look at a few special functions

Motivated by advances in statistics and machine learning, Argonne researchers recently have picked up the baton and addressed an ongoing problem involving a few special functions: robust implementations for derivatives. Numerical differentiation using simple finite differences of function evaluations is well known to be unstable. A reliable substitute for computing derivatives is automatic differentiation (AD), another Argonne proud development. Special functions, however, are so special that their tailored numerical treatment resisted straightforward AD techniques. Argonne asked: What is the best path forward? Should we revisit previous choices made by libraries like SPECFUN and select different expressions, should we change these expressions entirely and replace them with neural network models to use AD seamlessly or should we use different flavors of differentiation like the complex step method? And Argonne answered: Let’s try it all!

Automatic differentiation

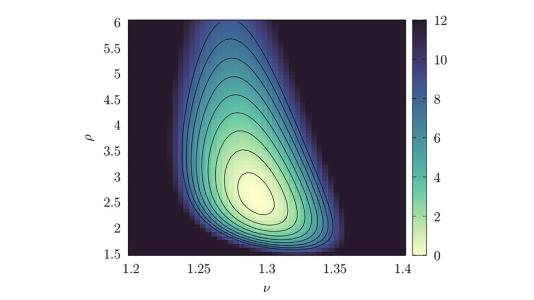

Researchers from Argonne’s Mathematics and Computer Science (MCS) division (O. Marin and M. Schanen) collaborated with researchers from the Department of Statistics at Rutgers University (C. J. Geoga and M. L. Stein) on a study of the Matérn covariance function, a special function used to define the statistical covariance between two points. This popular covariance function contains the modified Bessel function of the second kind. Estimating the smoothness parameter Kv of this function efficiently and accurately can be nontrivial (see Fig. 1); and although existing special functions libraries are fast, they typically use finite differences, leaving Kv as the dominant cost in evaluating the entire Matérn covariance function. The Argonne-Rutgers team instead introduced a new implementation of the smoothness parameter that provides high-order derivatives via automatic differentiation.

Even using dedicated expressions, special functions can be challenging for AD, demanding domain-specific branching programs to preserve derivatives throughout the entire domain.

“Simpler or more direct methods are not available, and hence applying AD transformations to existing special function libraries is unlikely to provide correct derivatives,” said Marin, an applied mathematics specialist in Argonne’s MCS division. “With our new implementation, however, users can easily extract derivative information from programs, and the resulting derivatives are significantly faster and more accurate than using finite differences.”

The new approach and experimental results are detailed in the recent paper by C. J. Geoga, O Marin, M. Schanen, and M. L. Stein titled “Fitting Matérn smoothness parameters using automatic differentiation,” https://arxiv.org/abs/2201.00090.

Derivatives via the complex step method

In a prerequisite study using dual-types AD for the Matérn covariance, Marin and her colleagues Geoga and Schanen assessed state-of-the art strategies for differentiating Bessel functions with respect to order.

“Derivatives with respect to order are intricate both mathematically and numerically,” said Marin. “The function is numerically cumbersome and prone to large errors under classical differentiation strategies.”

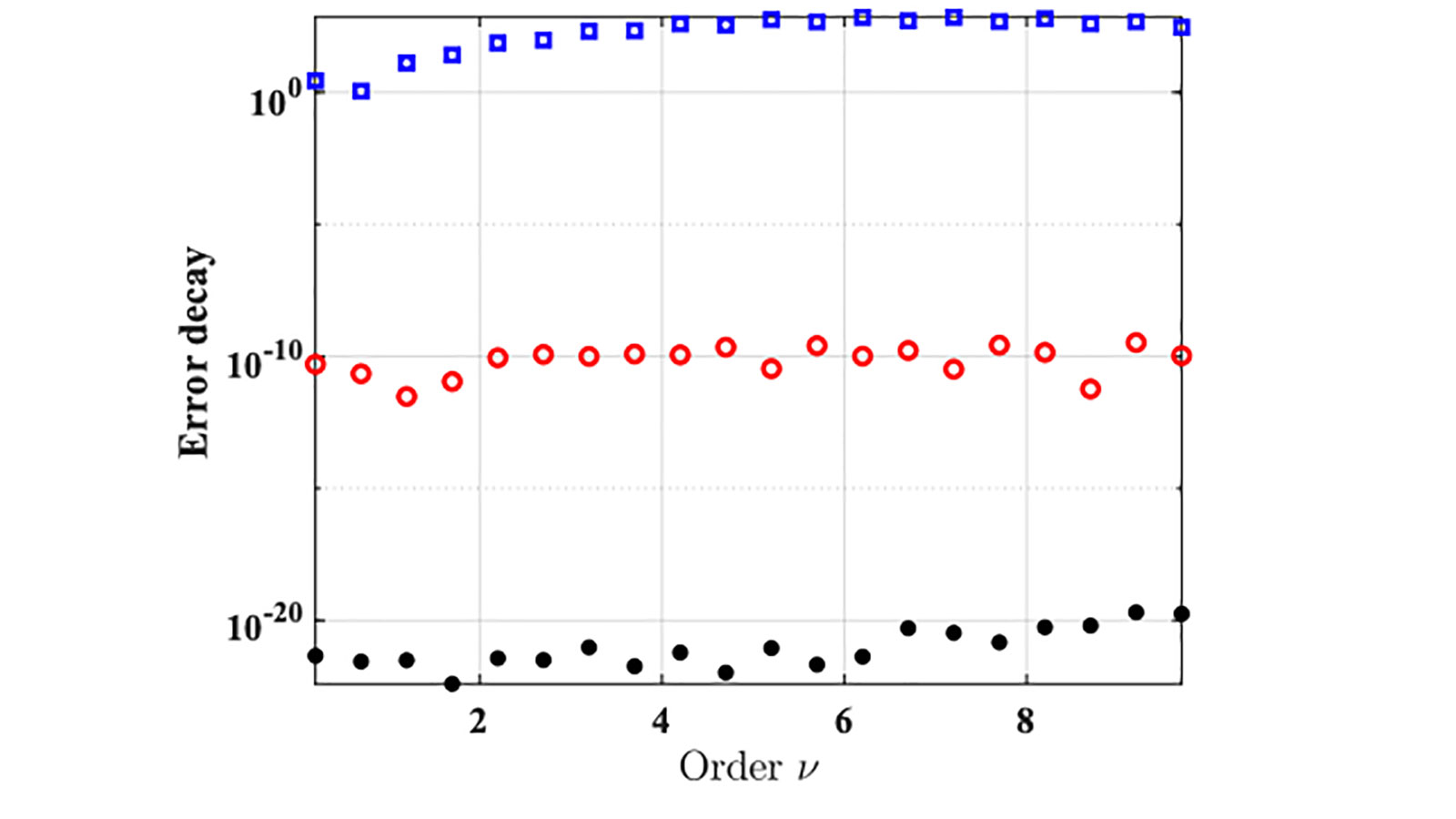

The solution Marin and her colleagues devised involves evaluating a function in complex space and then taking finite differences only in the complex coordinates, thereby removing the problematic round-off errors. The researchers identified series expansions that can be easily evaluated using the so-called complex step method. For increasingly large arguments, this method was shown to be more accurate and more computationally efficient than traditional second-order finite differences (see Fig. 2).

Moreover, the same formulas can be expanded for the AD approach to solve the difficulty of taking derivatives of special functions with respect to order and not only argument.

Their work is reported in the paper by O. Marin, C. Geoga, and M. Schanen titled “On automatic differentiation for the Matérn covariance,” presented at the 35th conference on Neural Information Processing Systems (NeurIPS 2021), https://arxiv.org/abs/2201.00262.

Neural networks

In yet another study of special functions, Marin and Y. Liu, who interned with the MCS division under funding from the National Science Foundation, explored a new approach exploiting the power of neural networks.

“A particularly problematic aspect of special functions is that most expressions are derived analytically but that using analytical formulas with numerical evaluations may lead to precision mismatches,” said Marin. “Special function neural network (SFNN) models, in contrast, can provide reliable derivative computations.”

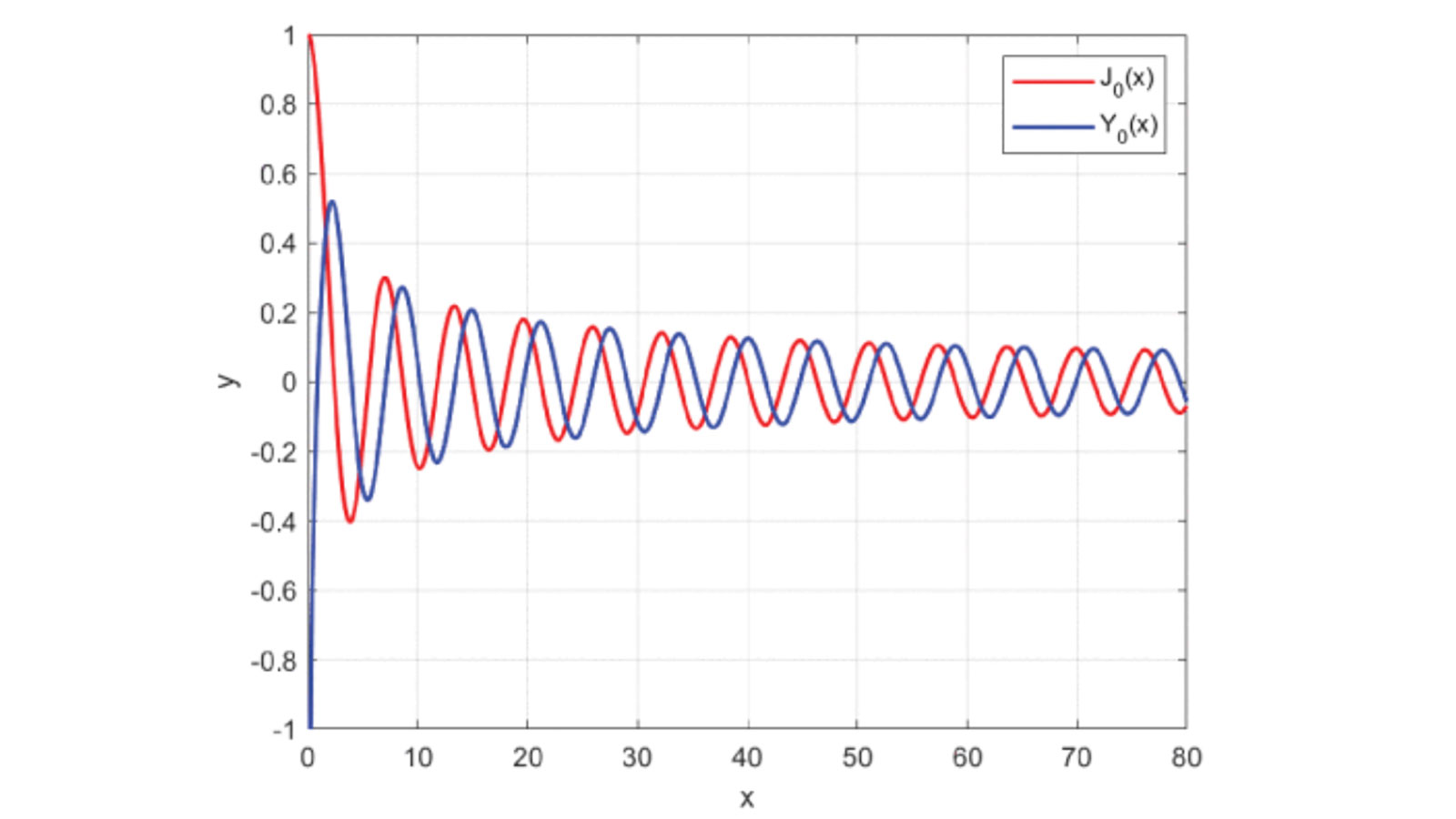

For this study the researchers chose two special functions: Bessel functions of the first and second kind. Although these functions are widely used, they still pose numerical challenges. For example, each Bessel function is oscillatory and has a range of solutions on its interval of definition (see Fig. 3).

With the SFNN model representation, the researchers were able to obtain a good approximation of the analytical derivative, with an error of less than one order of magnitude.The study shows that intervals traditionally requiring different asymptotic expansions can be treated identically by using neural network models.

For the full paper describing this study, see Yuzhen Liu and Oana Marin, “Special function neural network (SFNN) models,” IEEE International Conference on Cluster Computing (CLUSTER), 2021, pp. 680-685, https://ieeexplore.ieee.org/document/9556002

Better implementations

Special functions continue to be of interest, and new special functions continue to be identified. Emphasized by three studies described here, however, is not new functions but new approaches to enabling robust derivatives – ones that extend current special function implementations to assure accuracy, robustness and portability to derivative computations.

This research was funded by the U.S. Department of Energy, Office of Science, Office of Advanced Scientific Computing Research. The research for Liu was supported in part by an appointment with the National Science Foundation Mathematical Sciences Graduate Internship Program sponsored by the NSF Division of Mathematical Sciences.