Cosmology is currently in one of its most scientifically exciting phases. Two decades of surveying the sky have culminated in the celebrated Cosmological Standard Model. Yet two of the model’s key pillars, dark matter and dark energy, which together account for 95% of the mass-energy of the Universe, remain mysterious. Researchers at the U.S. Department of Energy’s (DOE) Argonne National Laboratory, as part of a collaborative project with five other national laboratories, have now received funding from the DOE Office of Science to tackle this mystery.

Dark matter refers to matter that neither emits, absorbs, nor reflects light but does exert a gravitational effect on itself and on surrounding atomic or “normal” matter. Dark energy refers to a mysterious new substance, or perhaps a modification to Einstein’s theory of gravity, that is currently driving an accelerated expansion of the Universe, pulling galaxies apart from each other at an ever-increasing rate. The existence of dark matter and dark energy poses deep fundamental questions that currently cannot be answered.

To help address these questions, scientists from Argonne’s High Energy Physics (HEP) and Mathematics and Computer Science (MCS) divisions will lead a three-year project titled “Computation-Driven Discovery for the Dark Universe.” The key scientific aim is to establish a computation-based discovery capability for critical cosmological probes by exploiting next-generation high-performance computing architectures.

“The time is ideal for such an ambitious project,” said Salman Habib, the project director and a member of Argonne’s HEP and MCS divisions. “Driven by advances in semiconductor technology, next-generation optical sky surveys represent an order of magnitude improvement over current cosmological constraints – a major step forward in investigating the ‘Dark Universe.’ And high-performance computers – supported by breakthroughs in algorithms and computer software – are providing a matching simulation capability, enabling researchers to analyze that data, extract insights, and make precision predictions for these observations.”

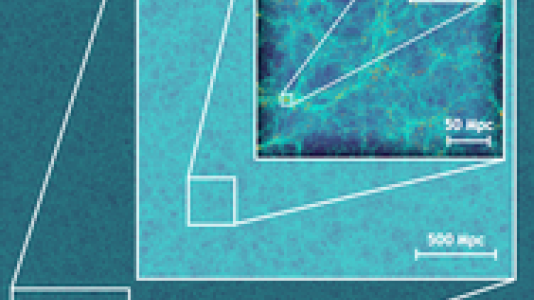

The cosmological research will focus on precision probes of the large-scale distribution of matter in the Universe – for example, statistics of the distribution of galaxies in space and mass mapping via weak gravitational lensing, the bending of light from a distant source caused by an intervening mass distribution. These probes provide crucial information related to dark energy and dark matter, primordial density fluctuations, and even the contribution of neutrinos to the overall composition of the Universe.

The computational effort will target three fronts: architecture-aware numerical methods for gravity, hydrodynamics, and associated data analyses; programming paradigms for grid/particle hybrid algorithms; and software designed to exploit many-core and many-thread environments, as well as heterogeneous systems.
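For readers unfamiliar with grid/particle hybrid methods, the basic idea can be illustrated with a toy example. The sketch below is not taken from the project's codes; it is a minimal one-dimensional cloud-in-cell deposit, a standard particle-mesh step in which each particle's mass is shared between its two nearest grid cells. The function name `deposit_cic` and its parameters are hypothetical and chosen only for illustration.

```python
import math


def deposit_cic(positions, masses, n_cells, box_size):
    """Spread particle masses onto a periodic 1D grid with cloud-in-cell weights.

    Illustrative sketch only: a generic particle-mesh step, not the
    project's actual implementation.
    """
    cell = box_size / n_cells
    density = [0.0] * n_cells
    for x, m in zip(positions, masses):
        # Position in units of cells, measured from the centre of cell 0.
        s = (x % box_size) / cell - 0.5
        left = math.floor(s)
        frac = s - left  # share of the mass assigned to the right-hand cell
        density[left % n_cells] += m * (1.0 - frac) / cell
        density[(left + 1) % n_cells] += m * frac / cell
    return density


if __name__ == "__main__":
    grid = deposit_cic([0.5, 1.0, 3.9], [1.0, 1.0, 1.0], n_cells=4, box_size=4.0)
    print(grid)
```

In a particle-mesh scheme, a gridded density like this would feed a grid-based gravity solve, and the resulting force would be interpolated back to the particles. The deposit conserves total mass because the two weights for each particle sum to one.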

“Interpreting future observations is impossible without a simulation effort fully as revolutionary as the new surveys. To keep pace with the unprecedented precision and resolution of the observations will require innovative multiphysics and multiscale capabilities in order to address complex physical processes,” said Katrin Heitmann, the Argonne project PI, also from Argonne’s HEP and MCS divisions.

The Argonne researchers will use a new framework they have developed called HACC, short for Hardware/Hybrid Accelerated Cosmology Codes. HACC is designed for extreme performance, but with great flexibility in mind, combining a variety of programming models that make it easily adaptable to different platforms. HACC is a Gordon Bell Award finalist for 2012. HACC’s performance optimization on the IBM Blue Gene/Q was carried out in collaboration with the Argonne Leadership Computing Facility’s Performance Engineering group.

“HACC is the first – and currently the only – large-scale cosmology code suite worldwide that can run at scale (and beyond) on all available supercomputer architectures, including Cell-accelerated hardware, conventional clusters as well as clusters enhanced by graphics processing units (GPUs), the IBM Blue Gene/P and Blue Gene/Q systems, and the Intel MIC architecture,” said Habib.

Along with HACC’s simulation framework, the Argonne researchers have developed two companion tools: a matched analysis system for high-performance parallel in situ and postprocessing analysis, and a cosmic calibration framework that interpolates over high-dimensional spaces by using sophisticated sampling techniques.
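The announcement does not say which sampling techniques the calibration framework uses. As one illustration of the general idea, the sketch below draws a Latin hypercube design, a common space-filling scheme in which each parameter range is split into equal strata and every stratum is sampled exactly once. The function name `latin_hypercube` and the example bounds are hypothetical.

```python
import random


def latin_hypercube(n_samples, bounds, seed=0):
    """Return n_samples points, with one point per stratum in every dimension.

    Illustrative sketch only: the sampling scheme is an assumption,
    not a description of the project's calibration framework.
    """
    rng = random.Random(seed)
    columns = []
    for low, high in bounds:
        # One value per stratum, placed at random inside that stratum.
        strata = [(i + rng.random()) / n_samples for i in range(n_samples)]
        # Shuffle so that strata are paired at random across dimensions.
        rng.shuffle(strata)
        columns.append([low + u * (high - low) for u in strata])
    return [tuple(col[i] for col in columns) for i in range(n_samples)]


if __name__ == "__main__":
    # Two hypothetical parameters with made-up ranges.
    for point in latin_hypercube(5, [(0.0, 1.0), (0.0, 2.0)]):
        print(point)
```

Each sampled point would correspond to one simulation run, and an interpolation scheme would then be fitted across those runs to predict results at parameter values that were never simulated directly.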

“These powerful computational ‘tools of discovery’ will be focused on developing and sharpening cosmological probes in the domains of optical galaxy surveys, both imaging and spectroscopic,” said Tom Peterka of Argonne’s MCS division. Peterka will play a lead role in some of the computer science research tasks within the project.

Essential to the success of these tools is access to DOE’s leadership-class computer systems. “We will make full use of the systems at the Argonne Leadership Computing Facility, the Oak Ridge Leadership Computing Facility, and the National Energy Research Scientific Computing Center at Lawrence Berkeley National Laboratory,” said Peterka.

The five other national laboratories participating in the project are Brookhaven National Laboratory, Fermi National Accelerator Laboratory, Lawrence Berkeley National Laboratory, Los Alamos National Laboratory, and SLAC National Accelerator Laboratory. The project is funded under the DOE Office of Science’s Scientific Discovery through Advanced Computing (SciDAC) program. To achieve the aims of the project, the researchers will also work closely with the SciDAC Institutes.