Yet despite these benefits, the software used in simulation has not received the same attention as experimental facilities. Whereas scientists readily recognize the importance of having superior laboratory instruments, a similar appreciation of software quality has been slow to emerge.

One can understand that domain scientists might question spending a lot of predevelopment time on formulating a detailed software plan – other than perhaps outlining what they need the code to accomplish on the particular computer hardware they will be using. And one can understand why project funders might balk at providing money both for the actual application and for the software behind that application.

But Anshu Dubey, a computational scientist in the Mathematics and Computer Science Division at Argonne National Laboratory, strongly believes that scientific software deserves better. In her article titled “Insights from the Software Design of a Multiphysics Multicomponent Scientific Code,” which appeared in Computing in Science & Engineering, Dubey readily acknowledged that devising a good software design methodology takes time – time to understand the requirements, constraints and challenges of the application before any code writing is undertaken. She emphasized, however, that the long-term rewards are well worth the upfront costs.

Dubey supported her position by describing what she has learned from her experience with the multiphysics multicomponent software project FLASH.

“FLASH’s return on investment has been outstanding,” Dubey said. Indeed, although originally designed for astrophysics, it has been easily customized for other domains as well, including high energy density physics and fluid structure interactions. Moreover, it has successfully adapted to changes in high-performance computing, such as the emergence of graphics processing units. “Since its initial release in 2000, FLASH has repeatedly proved its value, not only in terms of the quality of science, but also in terms of scientific productivity,” Dubey said.

Two branches, two approaches

Scientific code has two types of components: numerics and infrastructure. For long-lived flexible scientific code, Dubey argues, these two types should be treated differently. Components implementing the model should be as close to a plug-and-play design as feasible, adapting rapidly to any changes in scientific understanding. In sharp contrast, the infrastructure part of the code requires a thorough understanding of the design constraints imposed by the algorithms used in the science models and a careful prototyping and evaluation of the design space.

“That doesn’t come cheap,” Dubey said. “But a well-designed software infrastructure should not need major overhaul through several generations of computing platforms.”
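To make the split between numerics and infrastructure concrete, here is a minimal Python sketch. It is illustrative only and is not FLASH's actual design or API: the names `PhysicsUnit`, `Decay`, `ConstantSource` and `Driver` are hypothetical. The idea it tries to show is that the model components share one small interface and can be swapped freely, while the driver that owns the state and the time loop stays unchanged.

```python
from typing import List, Protocol


class PhysicsUnit(Protocol):
    """Hypothetical interface every numerics component agrees to."""

    def advance(self, state: List[float], dt: float) -> List[float]:
        ...


class Decay:
    """Toy model component: explicit first-order decay."""

    def __init__(self, rate: float) -> None:
        self.rate = rate

    def advance(self, state: List[float], dt: float) -> List[float]:
        return [u * (1.0 - self.rate * dt) for u in state]


class ConstantSource:
    """Toy model component: adds a uniform source term."""

    def __init__(self, strength: float) -> None:
        self.strength = strength

    def advance(self, state: List[float], dt: float) -> List[float]:
        return [u + self.strength * dt for u in state]


class Driver:
    """Toy infrastructure: owns the state and the time loop.

    It knows nothing about the physics inside each unit.
    """

    def __init__(self, state: List[float], units: List[PhysicsUnit]) -> None:
        self.state = state
        self.units = units

    def run(self, steps: int, dt: float) -> List[float]:
        for _ in range(steps):
            for unit in self.units:
                self.state = unit.advance(self.state, dt)
        return self.state


# Swapping or reordering units requires no change to Driver.
driver = Driver([1.0, 2.0], [Decay(0.5), ConstantSource(2.0)])
final = driver.run(steps=1, dt=1.0)
```

In this sketch, replacing `Decay` with a different model means writing one new class with an `advance` method; the driver code is untouched.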

A balancing act

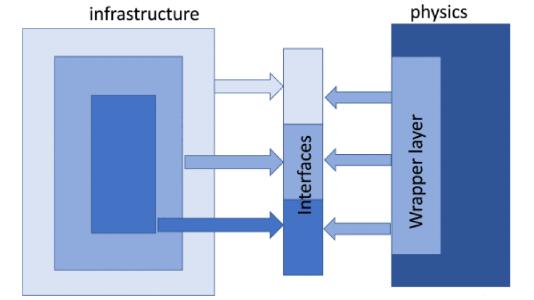

According to Dubey, the entire simulation design process can be viewed as a balancing act between competing issues. And one way to balance conflicting requirements is to design for hierarchical access to the infrastructure, exposing functionality at different levels of granularity; see Fig. 1. For example, the developer of a physics module may opt for more transparency, interacting with the infrastructure at a superficial level; or, the developer may prefer to have the module interact with the infrastructure at a deeper level for greater control.
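One way to picture hierarchical access is the following minimal Python sketch. It is an assumption-laden illustration, not the FLASH or Flash-X interface: `Block`, `Grid`, `map_cells` and `iter_blocks` are hypothetical names. The infrastructure exposes the same data at two levels of granularity, and each physics module chooses the level it needs.

```python
from typing import Callable, Iterator, List


class Block:
    """One patch of the domain, stored as a flat list of cell values."""

    def __init__(self, values: List[float]) -> None:
        self.values = values


class Grid:
    """Hypothetical infrastructure layer that owns all blocks."""

    def __init__(self, blocks: List[Block]) -> None:
        self._blocks = blocks

    def iter_blocks(self) -> Iterator[Block]:
        """Deeper level: hand out blocks so the caller controls the loops."""
        return iter(self._blocks)

    def map_cells(self, update: Callable[[float], float]) -> None:
        """Shallower level: the caller supplies only a per-cell update."""
        for block in self.iter_blocks():
            block.values = [update(v) for v in block.values]


def heat_source(grid: Grid) -> None:
    """Physics module that uses the coarse interface and never sees blocks."""
    grid.map_cells(lambda v: v + 1.0)


def normalize_per_block(grid: Grid) -> None:
    """Physics module that needs block-level control (a per-block maximum)."""
    for block in grid.iter_blocks():
        peak = max(block.values)
        block.values = [v / peak for v in block.values]


grid = Grid([Block([1.0, 3.0]), Block([0.0, 1.0])])
heat_source(grid)
normalize_per_block(grid)
result = [block.values for block in grid.iter_blocks()]
```

The first module stays insulated from how the data is laid out, while the second accepts that coupling in exchange for control over the loop structure.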

A sound investment

The increasing heterogeneity of computing platforms illustrates the importance of a well-thought-out software design. Accelerators, for example, are becoming ubiquitous, even in mid-sized clusters; and these accelerators differ from one vendor to another and from one generation to the next. Is it time to change the software design process?

In answering this question, Dubey drew on her experience in developing Flash-X, the new exascale code derived from FLASH.

“My colleagues and I have found the layered interface design sufficient for even such a dramatic refactoring of the FLASH architecture,” Dubey said. “Arguably, the details in Flash-X get more complex, but the basic design principles – modularity, flexibility and separation of concerns – still hold. Investment in software architecture design remains a wise investment indeed.”

For more ideas and lessons learned about software design, see Anshu Dubey, “Insights from the Software Design of a Multiphysics Multicomponent Scientific Code,” Computing in Science & Engineering 23, no. 3, pp. 92–95, 2021. DOI: 10.1109/MCSE.2021.3069343