The growing volumes of data produced by high-performance computing applications raise a critical question for storage, transfer and analysis: how to reduce the data size while preserving acceptable data fidelity. The lossy data compression model SZ is widely acknowledged as one of the most effective means of achieving this objective. However, SZ suffers from low compression throughput. For example, SZ’s throughput on a single CPU core is tens to hundreds of megabytes per second, far below the data acquisition rates (up to 250 GB/s) of some advanced instruments such as the Linac Coherent Light Source-II.

Field programmable gate array (FPGA) accelerators offer many advantages, including configurability, high energy efficiency, low latency and external connectivity. Hence, they are suitable for real-time processing such as streaming big data analytics.

Motivated by these FPGA advantages, a team of researchers from Argonne National Laboratory, the University of Alabama, the University of California, Riverside and the University of North Carolina at Charlotte has developed a co-design framework for SZ, called waveSZ.

“Few studies have been made of FPGA-accelerated lossy compression for scientific data,” said Sheng Di, a computer scientist in Argonne’s Mathematics and Computer Science Division. He noted that the principal existing model based on SZ and FPGAs can lead to low prediction accuracy on multidimensional datasets. “With waveSZ, we adopt a wavefront memory layout to reorganize the data access order so that SZ can be applied to FPGAs without this degradation,” Di said.
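The idea of reorganizing the data access order can be illustrated with a small sketch. It assumes that "wavefront" means visiting a 2D grid by anti-diagonals, so that every point on one diagonal depends only on points from earlier diagonals and the points of a diagonal can be processed back to back. The function names `wavefront_order` and `to_wavefront` are hypothetical, and the layout used in waveSZ itself may differ in detail.

```python
def wavefront_order(rows, cols):
    """Return (i, j) index pairs grouped by anti-diagonal d = i + j.

    Assumption: this anti-diagonal traversal is one common reading of a
    "wavefront" layout, not necessarily the exact layout used by waveSZ.
    """
    order = []
    for d in range(rows + cols - 1):
        i_lo = max(0, d - cols + 1)   # first row that intersects diagonal d
        i_hi = min(rows - 1, d)       # last row that intersects diagonal d
        for i in range(i_lo, i_hi + 1):
            order.append((i, d - i))
    return order


def to_wavefront(grid):
    """Flatten a 2D list into a 1D list following the wavefront order."""
    rows, cols = len(grid), len(grid[0])
    return [grid[i][j] for i, j in wavefront_order(rows, cols)]


if __name__ == "__main__":
    grid = [[1, 2, 3],
            [4, 5, 6]]
    print(to_wavefront(grid))
```

In this ordering, a predictor that reads the upper, left and upper-left neighbors of a point never reads a value from the same diagonal, which is the property a hardware pipeline would need to avoid stalls.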

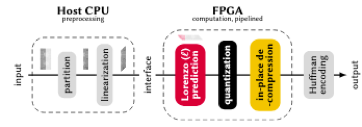

WaveSZ is a hardware-algorithm co-design. Specifically, the preprocessing for the wavefront memory layout is done on the host CPU, and the pipelined prediction, linear-scaling quantization and decompression are done on the FPGA; see Fig. 1.
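The prediction and linear-scaling quantization stages can be sketched in software as follows. This is a simplified illustration under stated assumptions, not the waveSZ implementation: it assumes a 2D predictor built from the upper, left and upper-left neighbors, an absolute error bound, and an unlimited number of quantization bins. The names `compress_2d` and `decompress_2d` are hypothetical, and the final lossless encoding of the integer codes is omitted.

```python
def compress_2d(grid, error_bound):
    """Predict each value from already-reconstructed neighbors and quantize
    the prediction error into integer codes with bin width 2 * error_bound.

    Assumptions: neighbor-based 2D predictor, absolute error bound,
    unbounded code range (no handling of unpredictable values).
    """
    rows, cols = len(grid), len(grid[0])
    step = 2.0 * error_bound
    codes = [[0] * cols for _ in range(rows)]
    recon = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            up = recon[i - 1][j] if i > 0 else 0.0
            left = recon[i][j - 1] if j > 0 else 0.0
            diag = recon[i - 1][j - 1] if i > 0 and j > 0 else 0.0
            pred = up + left - diag
            # Linear-scaling quantization: nearest multiple of the bin width.
            code = round((grid[i][j] - pred) / step)
            codes[i][j] = code
            # Use the reconstructed value so the decompressor sees the same data.
            recon[i][j] = pred + code * step
    return codes, recon


def decompress_2d(codes, error_bound):
    """Rebuild the lossy values from the integer codes alone."""
    rows, cols = len(codes), len(codes[0])
    step = 2.0 * error_bound
    recon = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            up = recon[i - 1][j] if i > 0 else 0.0
            left = recon[i][j - 1] if j > 0 else 0.0
            diag = recon[i - 1][j - 1] if i > 0 and j > 0 else 0.0
            recon[i][j] = (up + left - diag) + codes[i][j] * step
    return recon
```

Because each prediction reads reconstructed neighbors, a point cannot be processed until its neighbors are finished. That dependency is what makes the data access order matter when the loop is turned into a hardware pipeline.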

Tests with waveSZ on three real-world datasets involving climate, hurricane, and cosmology simulations showed that waveSZ can improve the data compression ratio by 2.1 times and the throughput by 5.8 times on average, compared with the existing state-of-the-art FPGA-based SZ model.

The work is documented in the paper by J. Tian, S. Di, C. Zhang, X. Liang, S. Jin, D. Cheng, D. Tao, and F. Cappello, “waveSZ: a hardware-algorithm co-design of efficient lossy compression for scientific data,” and was presented at the Principles and Practice of Parallel Programming conference on Monday, Feb. 24, in San Diego.