It seems as though we are inundated, of late, with ever-more news, ads, and articles — like this one — on the “rise of artificial intelligence (AI).” A foreboding phrase in the mid to latter part of the 20th century, it often referred to the rise of robots and their eventual superiority and conquest of humans.

Now, for most of us, it means we can talk to and expect a response from our phones, televisions and personal digital assistant devices. Cars now drive themselves to some degree, and we can meet or game with friends in virtual spaces.


More importantly, AI is playing a significant role in defining new research methods that bring us to resolution or discovery more quickly than ever before. It allows us to literally walk among molecules to investigate components that might benefit medicine or manufacturing, or dramatically reduce the time it would take an army of humans to extract very specific data from tens of thousands of journals and technical papers.

The U.S. Department of Energy’s (DOE) Argonne National Laboratory finds itself using or developing many of these technologies as AI extends to new branches of science and technology, sprouting new buds that represent various facets of human function and cognition — from learning to language processing to visual recognition.

And yes, there are robots.

Bringing the players together

“Artificial intelligence has triggered a significant transformation of human society that involves a stronger human-machine partnership,” said Marius Stan, a computational scientist leading intelligent materials design in Argonne’s Applied Materials division, senior fellow at the University of Chicago’s Computation Institute and a senior fellow of the Northwestern-Argonne Institute for Science and Engineering.

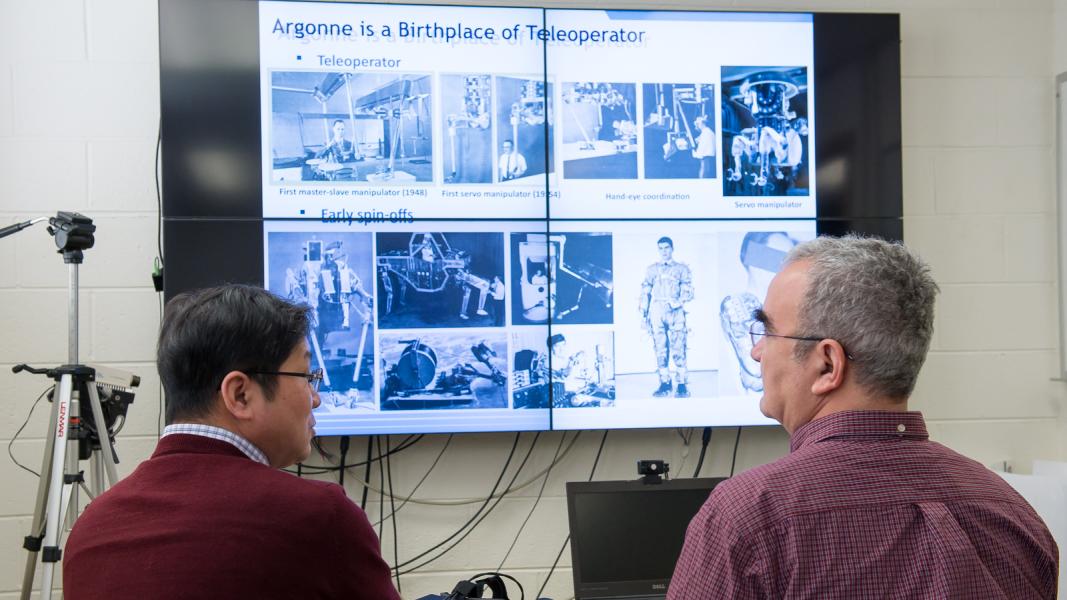

In November, Stan led Argonne’s first workshop on the subject, which brought together researchers from disparate areas of study who are finding common ground in AI applications, such as neural networks and augmented reality. The idea, he said, was to create a forum for discussing Argonne’s AI capabilities, expertise and project ideas.

Stan’s own work in revealing and exploiting the secrets of material properties is increasingly enhanced by several components of AI. For example, he is using machine learning — the construction of algorithms and software that can learn from data and progressively improve predictions of new data — to optimize computer models of complex material properties. The intent is to employ these models to discover and design new materials for a variety of applications in energy production, energy storage and electronics.

Immersive visualization is another component of AI that is providing previously unobtainable access to material characteristics; such access may, in turn, bolster the machine-learning process. An advanced form of virtual reality, immersive visualization allows a user, wearing a pair of specialized goggles, to move within 3-D models of complex systems.

Researchers can comprehensively visualize the atomic structure of a material in greater detail than ever before to understand, for example, the way atoms interact, or to observe the formation and evolution of defects inside materials, explained Stan.

Argonne houses the Studio for Augmented Collaboration, where researchers from across the laboratory can apply the newest visualization techniques to find long-sought-after answers or solve unique new problems.

“The studio exists because we genuinely believe that the complex systems we’re studying cannot be fully understood and explored by just one person sitting at a desk using traditional tools,” said John Murphy, an anthropologist and computational social scientist with the Decision and Infrastructure Sciences division and a fellow at the University of Chicago’s Computation Institute.

Where Stan works on physical systems, Murphy’s research focuses on the dynamics of social systems. And while their subject matter is quite different, both use similar forms of visualization, machine learning and natural language processing (NLP), another component of AI.

A form of intelligent software, NLP can review, analyze and summarize information about a given topic from journal articles, reports and other publications. Voice-activated technologies and on-the-fly language translators are just a few of the commercially available examples.

Murphy spoke about NLP at the AI workshop, framing his conversation around a water management project to which he applied the process. Focusing on four cities in the West, his group used a form of NLP called “named entity recognition” to extract the names of agencies or institutions involved in managing water. The objective of the research, funded by the National Science Foundation, was to determine the complexity of and interdependencies among water management structures in these areas.

Murphy’s team devised an algorithm to examine a large number of newspaper articles from the area and automatically generate a list of the water management institutions. By eliminating specific types of words (e.g., people’s names, place names and roads), they were able to search for more targeted terminology like “water” and institution names.

“When you see two institutions mentioned in the same article, you can start to put together a network of connected institutions, and you get a different network structure depending on how complicated the water management scenario is in a particular area,” Murphy explained.

“The interesting part for us was that we were able to capture a statistic about the history of a place just by analyzing the language that was being used in public newspapers.”
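The co-occurrence idea Murphy describes can be illustrated with a minimal Python sketch. This is not the team's algorithm: the institution names, the example articles and the helper functions `institutions_in` and `cooccurrence_network` are all hypothetical, and a simple string lookup stands in for a real named entity recognition step.

```python
from collections import Counter
from itertools import combinations

# Hypothetical institution names; a real pipeline would obtain these
# from a named entity recognition step rather than a fixed list.
INSTITUTIONS = [
    "City Water Department",
    "Regional Water Authority",
    "State Water Board",
]


def institutions_in(article: str) -> set[str]:
    """Return the known institutions mentioned in one article."""
    text = article.lower()
    return {name for name in INSTITUTIONS if name.lower() in text}


def cooccurrence_network(articles: list[str]) -> Counter:
    """Count how often each pair of institutions shares an article."""
    edges = Counter()
    for article in articles:
        # Sort so each pair is always stored in the same order.
        found = sorted(institutions_in(article))
        edges.update(combinations(found, 2))
    return edges


# Made-up example articles, for illustration only.
ARTICLES = [
    "The City Water Department and the Regional Water Authority agreed on new rates.",
    "The State Water Board reviewed a plan from the Regional Water Authority.",
    "The City Water Department met the Regional Water Authority again.",
]

network = cooccurrence_network(ARTICLES)
for (a, b), weight in sorted(network.items()):
    print(f"{a} -- {b}: {weight}")
```

Each pair of institutions becomes an edge whose weight is the number of articles mentioning both. Under these assumptions, an area with more complicated water management would tend to produce a network with more institutions and more connections among them.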

Connecting the dots

Their shared interests in AI have led Murphy and Stan to evolve the studio, with hopes of incorporating as much AI technology as is available into an integrated system geared toward collaborative research.

Their current work with voice-driven visualization in the context of virtual reality could lead to a machine-assisted research technology, similar to a Google Assistant or a Siri, which would truly transform the laboratory into a collaborative space for AI-based research.

Acting as a partner in the research process, a voice-activated research assistant could track the progress of a research problem, analyze information drawn from discussions and answer questions about or possibly propose new options to solve that problem.

“So the studio becomes a workspace where you meet with colleagues who are bringing different perspectives, different data and different expertise to the problem,” said Murphy. “Such a space would provide better connections to databases and different opportunities to visualize multiple data sets together, whether it’s using virtual reality or augmented reality. And it’s the product of these things, with the assistance of AI, that would allow you to do science better and faster.”

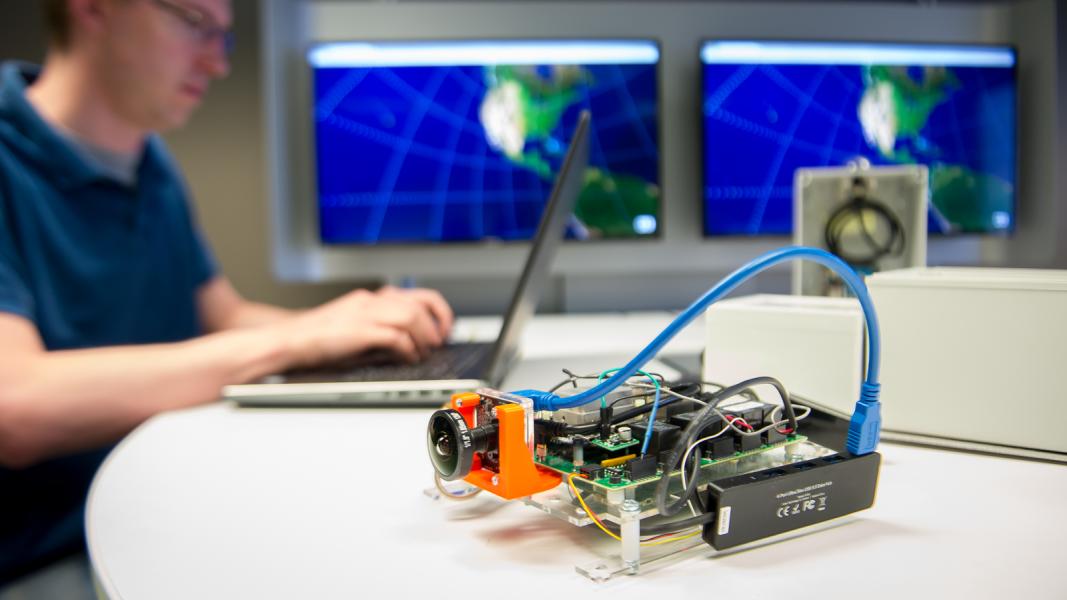

The new studio has been designed and furnished to be more functional and provide alternative configurations for equipment. That includes new visualization tools, an intelligent software-operated 3-D printer and a deep learning machine for real-time or near-real-time optimization of model and process parameters.

Among the equipment they have yet to acquire is a robot. That, it seems, is in the offing.

During the workshop, Stan produced an image that charted the growing complex of AI components used in research globally. He found, to his surprise, that his work and that of the workshop attendees covered many AI components. Until that discussion, he hadn’t fully realized the extent to which AI had proliferated across the laboratory. It was only during the event that he discovered that Argonne colleagues were working on additional AI components that filled the gaps in his chart, including robots.

“We will invite the others to present at the next workshop,” he promises.

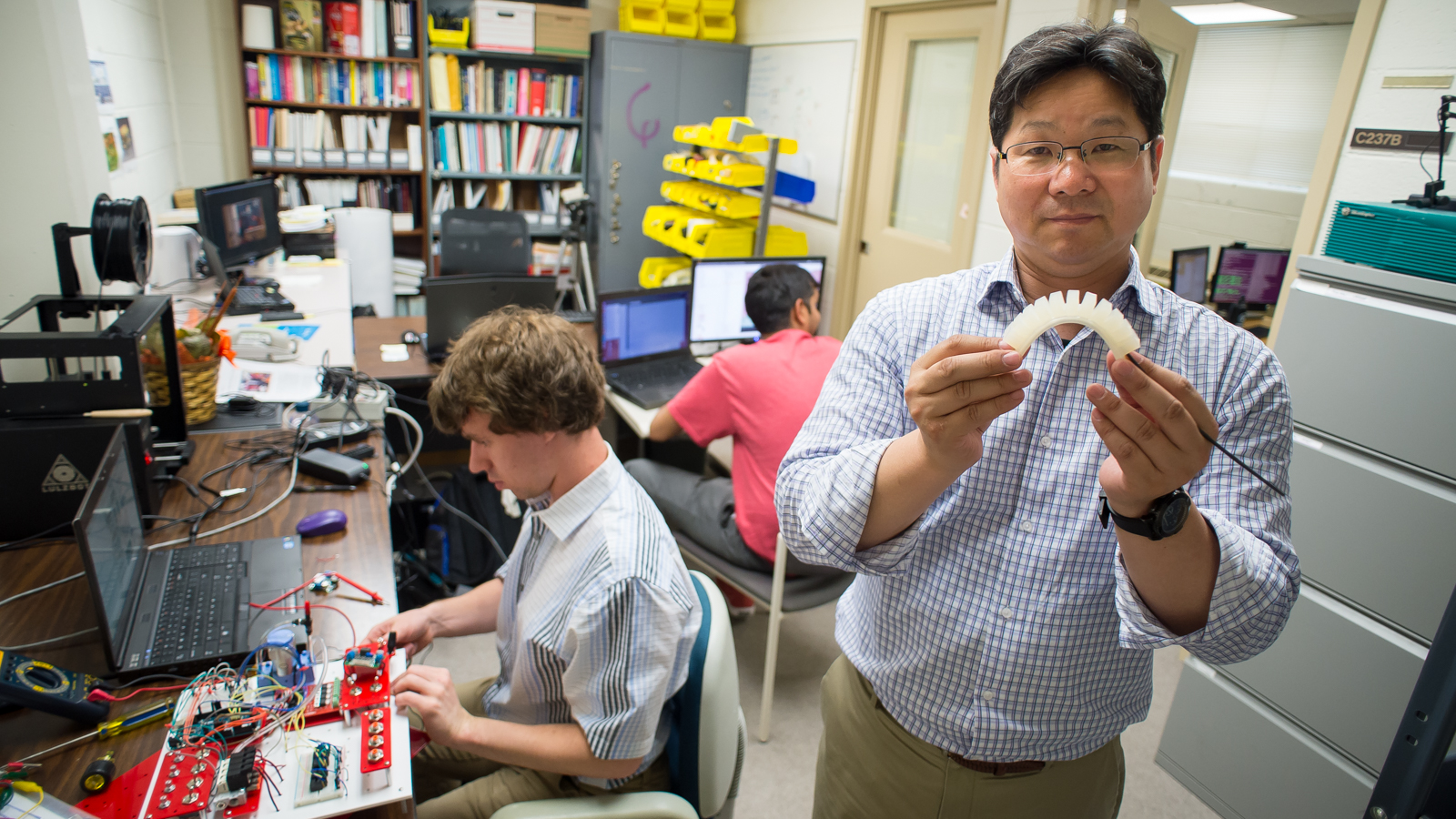

Among those “others” is Young Soo Park, a senior mechanical engineer in Argonne’s Advanced Materials division and senior fellow at the University of Chicago’s Computation Institute. In 1999, Park re-established Argonne’s robotics program, which had developed the first teleoperator or master/slave manipulator — essentially a manually controlled robotic arm — in 1948.

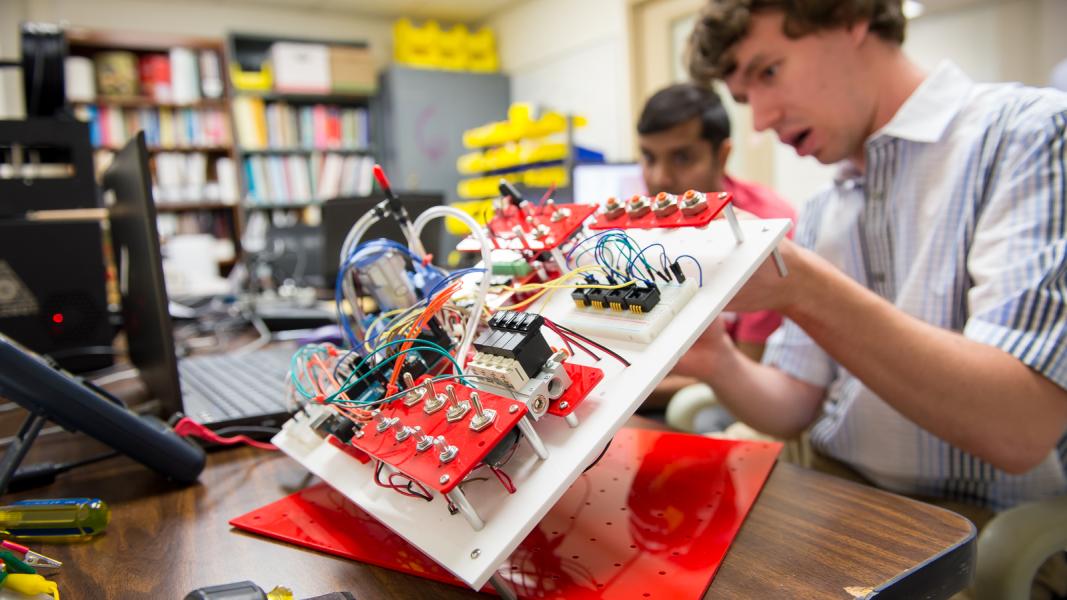

A roboticist for 30 years, Park said the early perception of AI was that it never worked. Ironically, breakthroughs in other areas of AI, like machine learning and deep neural networks, are allowing for more complex capabilities in both the human-robot interface and degrees of autonomy in robots.

Park’s research is in telerobotics or teleautonomy, a combination of remotely controlled and semi-autonomous robotics systems that require some level of human intervention. Much of that work revolves around the deployment of robots in the deactivation and decommissioning of nuclear power plants.

These are not the fluid, speaking, semi-emotive humanoids of science fiction movies like Blade Runner and Ex Machina. For the type of work in which Park’s robots would be engaged, simple and rugged works best.

Earlier attempts at greater automation created overly complex and vulnerable robots that proved ineffective in the field. Park determined that the robots required more operator aid, which translated into a merging of AI technologies.

Machine learning and visualization techniques like augmented reality help impart human-like behaviors that allow the robots to conduct essential autonomous jobs, like maneuvering in a defined space or adjusting the pressure required to dismantle or move an object.

But whether it’s robots or self-driving cars, Park is not an advocate of fully autonomous AI.

“My philosophy leans more toward human-robot symbiosis,” he said. “I think we should always get involved in robot operation to make sure they safely, efficiently and reliably fit in with human society.”

He and Stan met recently to discuss Park’s work and ways in which the two might collaborate. A restructuring within Argonne may place them both in the same division, potentially encouraging that collaboration. In the meantime, they already have broached the possibility of introducing one of Park’s robotic devices into the new AI lab.

“The fact that he is using elements of artificial intelligence to control the robots is very exciting to me,” exclaims Stan. “And it is important for Argonne, as well. There is an element of the lab that is driven by the evolution of science and technology and engineering. Many research centers are getting involved in this evolution of artificial intelligence and we want to be at the forefront.”

Stan’s research is funded by Argonne’s Laboratory-Directed Research and Development (LDRD) program and the National Institute of Standards and Technology (NIST)-funded CHiMaD project (via his joint appointment with Northwestern University). John Murphy’s immersive visualization work is funded by the National Science Foundation, and Young Soo Park is supported by funding from the DOE Offices of Environmental Management and Nuclear Energy and the Korea Atomic Energy Research Institute (KAERI) sponsored partnership program.